You've painstakingly gathered your audio and video data, from in-depth interviews and focus groups to podcasts and team meetings. Now you're facing the big challenge: how do you turn all this raw material into clear, actionable insights? The journey from a mountain of transcripts to a compelling conclusion depends entirely on your analytical approach. With so many qualitative data analysis methods to choose from, picking the right one is absolutely critical.

This decision isn't just a procedural step; it fundamentally shapes what you discover. The method you choose guides how you interact with your data, the patterns you search for, and the depth of meaning you can uncover. An ill-suited method might lead to shallow findings or, even worse, a misinterpretation of your participants' voices. This guide is here to help you avoid that.

We'll break down 10 distinct qualitative data analysis methods, from the widely used Thematic Analysis to the highly specific Conversation Analysis. For each one, we'll explain its core purpose, practical applications, and key strengths. Our goal is to give you the confidence to select the perfect framework for your research goals, ensuring you unlock the richest possible insights from your qualitative data.

1. Thematic Analysis

Thematic analysis stands out as one of the most fundamental and flexible qualitative data analysis methods. Its main job is to help you identify, analyze, and report patterns, or "themes," within your data. This approach is incredibly effective for making sense of large volumes of text, such as transcribed interviews, focus groups, or a series of podcast episodes.

Researchers work by systematically reading through transcripts, assigning codes to excerpts that represent a particular idea. As the coding continues, these individual codes are grouped into broader, overarching themes that capture the essential meaning of the data. Popularized by psychologists Virginia Braun and Victoria Clarke, this method provides a rich, detailed, and complex account of your data.

When to Use Thematic Analysis

This method is your go-to when you need to understand a set of experiences, views, or behaviors across a dataset. For instance, a podcast network could analyze transcripts from its entire catalog to pinpoint recurring topics that resonate most with its audience. Similarly, a research team could use it to find common patterns in patient interviews to help shape healthcare practices.

How to Implement It

- Familiarize & Code: Begin by thoroughly reading your data. As you read, apply initial codes to interesting features. For example, if you're analyzing customer support call transcripts, a code might be "frustration with shipping times."

- Generate Themes: Group your initial codes into potential themes. The code "frustration with shipping times" might fit into a broader theme like "Post-Purchase Experience."

- Review & Refine: Check if your themes actually work in relation to both the coded extracts and the full dataset. Make sure they are distinct and tell a coherent story.

- Define & Name: Clearly define and name each theme. Write a detailed analysis for each one, explaining its significance and using direct quotes from your data as powerful evidence. For a deeper look at this process, check out our guide on how to analyze qualitative interview data.
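If you keep your coding in a spreadsheet or script rather than dedicated software, the code-to-theme step above can be represented as a simple mapping. The sketch below is illustrative only: the excerpts, participant IDs, and the `excerpts_by_theme` and `unassigned_codes` helpers are hypothetical, and deciding which codes belong together remains an interpretive judgment no script makes for you. It just gathers the supporting quotes for each candidate theme so you can check the theme against the data during review.

```python
from collections import defaultdict

# Hypothetical coded excerpts: (participant, initial code, verbatim quote).
coded_excerpts = [
    ("P1", "frustration with shipping times", "It took three weeks to arrive."),
    ("P2", "frustration with shipping times", "Delivery kept getting pushed back."),
    ("P2", "unclear return policy", "I couldn't tell if I could send it back."),
    ("P3", "helpful support agent", "The agent sorted it out in minutes."),
]

# Candidate themes, each grouping several initial codes; revise freely during review.
themes = {
    "Post-Purchase Experience": {"frustration with shipping times", "unclear return policy"},
    "Support Quality": {"helpful support agent"},
}


def excerpts_by_theme(excerpts, theme_map):
    """Collect (participant, quote) pairs for every theme whose codes match an excerpt."""
    grouped = defaultdict(list)
    for participant, code, quote in excerpts:
        for theme, codes in theme_map.items():
            if code in codes:
                grouped[theme].append((participant, quote))
    return dict(grouped)


def unassigned_codes(excerpts, theme_map):
    """Codes not yet placed in any theme: candidates for a new theme or for discarding."""
    used = set().union(*theme_map.values())
    return {code for _, code, _ in excerpts} - used


for theme, quotes in excerpts_by_theme(coded_excerpts, themes).items():
    speakers = {p for p, _ in quotes}
    print(f"{theme}: {len(quotes)} excerpt(s) from {len(speakers)} participant(s)")
print("Unassigned codes:", unassigned_codes(coded_excerpts, themes))
```

Seeing how many participants contribute to a theme, not just how many excerpts it has, helps you spot themes that rest on one very talkative interviewee.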

2. Content Analysis

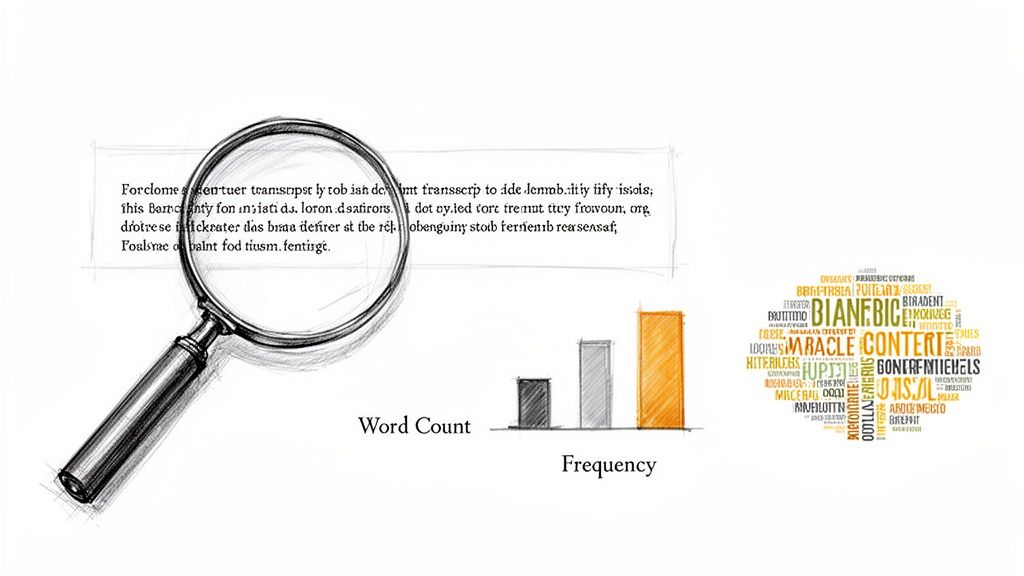

Content analysis is a systematic research method for quantifying and analyzing the presence, meanings, and relationships of specific words, themes, or concepts within text or media. It acts as a bridge between qualitative and quantitative techniques by counting and categorizing data to identify patterns. For example, you could analyze a season of podcast transcripts to count exactly how many times certain topics are mentioned.

This method transforms qualitative text from interviews, meetings, or depositions into structured, numerical data. Developed from early communication research and refined by scholars like Klaus Krippendorff, content analysis lets you systematically measure and compare communication characteristics, making it one of the more structured qualitative data analysis methods available.

When to Use Content Analysis

Content analysis is the perfect choice when you need to identify and quantify specific patterns across a large volume of text. A marketing team could use it to track brand sentiment in customer interviews by counting positive versus negative words. Likewise, a legal firm can analyze deposition transcripts to measure how often key terms relevant to a case appear.

How to Implement It

- Define Units & Categories: Decide what you'll be coding. This could be individual words, phrases, or entire concepts. Create a clear coding manual with rules for what fits into each category.

- Systematic Coding: Go through your transcripts and systematically code the data according to your predefined rules. For example, when analyzing meeting transcripts, you might code for "action item," "decision made," or "unresolved question."

- Count & Analyze: Tally the frequencies of your codes. Analyze these counts to find patterns, make comparisons, and draw conclusions. You might discover that one speaker in a focus group contributes the most ideas flagged as "innovative."

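- Ensure Reliability: To make your findings trustworthy, have a second coder independently code a portion of the data (a common rule of thumb is around 10-15%) using the same manual. Compare the two sets of codes with an agreement statistic such as Cohen's kappa or Krippendorff's alpha, and tighten any category definitions that the coders apply inconsistently.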
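The counting and reliability steps can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the two coders' labels are invented, and Cohen's kappa is used as one common chance-corrected agreement measure for two coders (Krippendorff's alpha is a frequent alternative, especially with more than two coders or missing codes). The `cohens_kappa` function is written here for illustration rather than taken from a specific library.

```python
from collections import Counter

# Hypothetical codes two coders independently applied to the same ten meeting-transcript segments.
coder_a = ["action item", "decision made", "action item", "unresolved question", "decision made",
           "action item", "action item", "unresolved question", "decision made", "action item"]
coder_b = ["action item", "decision made", "unresolved question", "unresolved question", "decision made",
           "action item", "action item", "unresolved question", "action item", "action item"]


def cohens_kappa(a, b):
    """Cohen's kappa: agreement between two coders, corrected for chance agreement."""
    if len(a) != len(b) or not a:
        raise ValueError("both coders must code the same, non-empty list of units")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    freq_a, freq_b = Counter(a), Counter(b)
    # Agreement expected if each coder picked categories independently at their own observed rates.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1:
        return 1.0  # both coders used a single, identical category throughout
    return (observed - expected) / (1 - expected)


# Count & analyze: frequency of each category for one coder.
print(Counter(coder_a).most_common())
print(f"Observed agreement: {sum(x == y for x, y in zip(coder_a, coder_b)) / len(coder_a):.0%}")
print(f"Cohen's kappa: {cohens_kappa(coder_a, coder_b):.2f}")
```

With these sample labels, raw agreement is 80% but kappa comes out at about 0.68, because some agreement would be expected by chance alone. That gap is why a chance-corrected statistic is usually reported instead of simple percent agreement.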

3. Grounded Theory

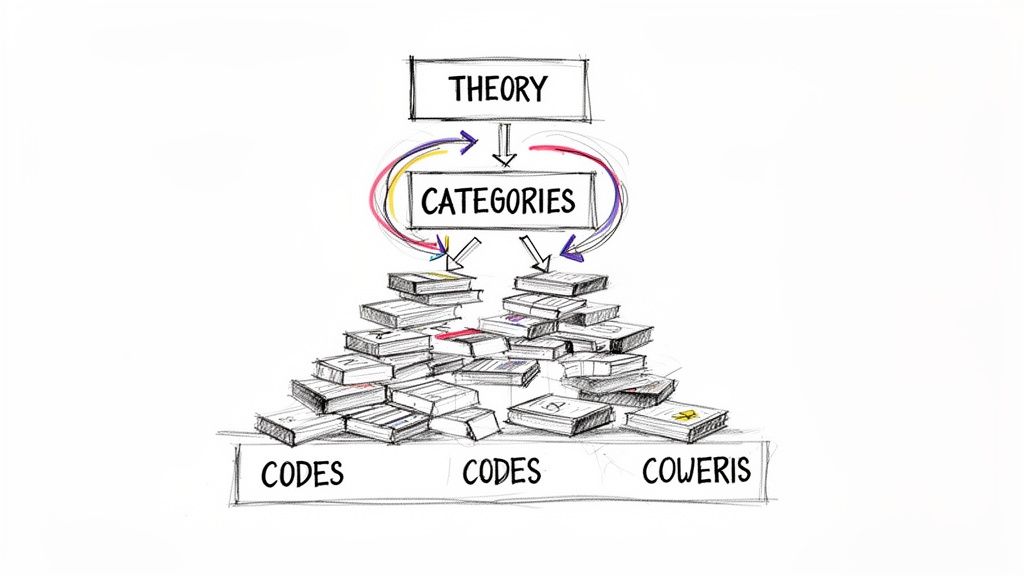

Grounded theory is one of the most rigorous qualitative data analysis methods because it involves building theories from the ground up, directly from the data itself. Unlike other methods where you might test an existing hypothesis, grounded theory is an inductive approach. Researchers develop explanatory theories by continuously comparing, coding, and analyzing data until clear patterns and relationships emerge.

This systematic process, originally developed by sociologists Barney Glaser and Anselm Strauss, is perfect for exploratory research where little is known about a topic. It allows a theory to emerge organically, rooted firmly in the experiences of the participants you're studying, whether they're customers in support calls or team members in a series of project meetings.

When to Use Grounded Theory

This method is ideal when you need to develop a brand-new theory or explanation for a process or social interaction. For instance, a business consultant could analyze meeting transcripts to build a theory about the dynamics of successful change management within a company. Similarly, content creators could use it to analyze video comments and build a model of audience engagement patterns.

How to Implement It

- Open Coding: Start by analyzing a small batch of transcripts (3-5 files) without any preconceived notions. Assign codes to concepts, actions, and events. For example, in meeting transcripts, a code might be "hesitation to share dissenting opinion."

- Axial & Selective Coding: Begin connecting your codes to form categories (axial coding) and then integrate these categories around a central core concept (selective coding). "Hesitation to share" might connect to a broader category like "Psychological Safety."

- Memo Writing: Continuously write memos to document your thoughts, ideas, and the relationships you see emerging between codes and categories. This is a crucial part of tracing how your theory develops.

- Theoretical Saturation: Keep collecting and analyzing data at the same time until new data no longer reveals new insights or properties about your core categories. This is your sign that you have a well-developed theory.
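Theoretical saturation is ultimately a judgment about whether your categories are well developed, but a running tally of how many new codes each batch of transcripts adds can support that judgment. The sketch below assumes hypothetical code sets per batch, and the `window` of two code-free batches is an arbitrary illustrative threshold, not an established rule.

```python
# Hypothetical codes from open coding, one set per batch of 3-5 meeting transcripts.
batches = [
    {"hesitation to share dissenting opinion", "leader sets agenda", "side conversations"},
    {"hesitation to share dissenting opinion", "fear of blame", "leader sets agenda"},
    {"fear of blame", "side conversations", "credit for ideas"},
    {"hesitation to share dissenting opinion", "fear of blame"},
    {"leader sets agenda", "credit for ideas"},
]


def new_codes_per_batch(batches):
    """Return how many previously unseen codes each batch contributed."""
    seen = set()
    counts = []
    for codes in batches:
        counts.append(len(codes - seen))
        seen |= codes
    return counts


def looks_saturated(counts, window=2):
    """Rough signal only: no new codes appeared in the last `window` batches."""
    return len(counts) >= window and all(c == 0 for c in counts[-window:])


counts = new_codes_per_batch(batches)
print("New codes per batch:", counts)
print("Possible plateau:", looks_saturated(counts))
```

Here the batches contribute 3, 1, 1, 0, and 0 new codes, so the helper reports a possible plateau. Treat that as a prompt to revisit your memos and the properties of your core categories, not as proof of saturation.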

4. Discourse Analysis

Discourse analysis is a powerful qualitative data analysis method that looks at how language is used in social contexts. It goes beyond what is said to explore how it is said, focusing on the way word choice, sentence structure, and conversation patterns build meaning, power dynamics, and social identities. This method is essential for understanding the subtle messages embedded within communication.

Researchers use this approach to deconstruct transcripts from interviews, meetings, or media broadcasts to reveal underlying ideologies, assumptions, and social norms. Influenced by thinkers like Michel Foucault, this method treats language not just as a tool for communication but as a social practice that shapes our reality. For content creators, this means analyzing podcast dialogues to understand how hosts build rapport or authority with their audience.

When to Use Discourse Analysis

This method is ideal when your goal is to understand the relationship between language, power, and social context. For example, a marketing team could analyze transcripts of sales pitches to identify language that successfully builds trust and persuades clients. Similarly, educators can study classroom discourse from lecture recordings to see how communication patterns impact student engagement and learning.

How to Implement It

- Select & Prepare Data: Choose the relevant text or conversation for analysis. A highly accurate transcript is non-negotiable, as even pauses and filler words are meaningful data points. For best results, see our guide on how to transcribe interviews.

- Identify Discourse Patterns: Systematically read the transcript to identify patterns in language use. This could include rhetorical devices, recurring metaphors, or specific ways of framing a topic.

- Analyze Context: Think about the broader social, cultural, and historical context. How do these factors influence the way language is used and understood in your data?

- Interpret Findings: Connect the linguistic patterns to their social functions. Explain how the discourse constructs a particular version of reality, establishes authority, or reinforces certain beliefs. For instance, you might analyze how a speaker’s consistent use of inclusive language fosters a sense of community.
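Discourse analysis depends on close, contextual reading, so counting words cannot do the analysis for you. It can, however, help you locate passages worth reading closely. As a hypothetical example tied to the inclusive-language point above, this sketch tallies first-person plural forms against singular and second-person forms for each speaker. The word lists, transcript turns, and the `pronoun_profile` helper are illustrative assumptions; a word like "you" can be generic or inclusive in context, which is exactly what the close reading has to settle.

```python
import re
from collections import Counter

# Hypothetical speaker-labelled turns from a podcast transcript.
turns = [
    ("Host", "Welcome back. Today we're going to dig into what our listeners asked us."),
    ("Guest", "I think you need to hear this: I tried it and it failed."),
    ("Host", "And that's something we've all been through, right? Let's unpack it together."),
]

# Illustrative word lists only; a real study would define and justify these in its notes.
INCLUSIVE = {"we", "we're", "we've", "us", "our", "let's"}
INDIVIDUAL = {"i", "i'm", "i've", "me", "my", "you", "your"}


def pronoun_profile(turns):
    """Tally inclusive vs. individual pronoun forms per speaker to flag passages for close reading."""
    profile = {}
    for speaker, text in turns:
        words = re.findall(r"[a-z']+", text.lower())
        counts = profile.setdefault(speaker, Counter())
        counts["inclusive"] += sum(w in INCLUSIVE for w in words)
        counts["individual"] += sum(w in INDIVIDUAL for w in words)
    return profile


for speaker, counts in pronoun_profile(turns).items():
    print(speaker, dict(counts))
```

A host who consistently leans on "we" and "let's" gives you a set of turns to examine for how that framing positions the audience; the interpretation itself still comes from context.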

5. Phenomenological Analysis

Phenomenological analysis seeks to understand the very essence of a lived experience from the perspective of those who have lived it. Instead of finding patterns across data, this qualitative data analysis method dives deep into individual or shared human experiences to grasp their core meaning. It's about describing, not explaining, the "what" and "how" of a particular phenomenon.

Originating from philosophy with figures like Edmund Husserl, this approach involves analyzing rich narrative data, such as in-depth interviews or personal stories. The goal is to set aside preconceived notions (a process called "bracketing") to truly understand how individuals make sense of their world, providing a profound look into subjective reality.

When to Use Phenomenological Analysis

This method is perfect when your goal is to understand the subjective, emotional, and perceptual aspects of an experience. For instance, a healthcare organization could use it to understand the lived experience of chronic illness by analyzing patient narratives. Likewise, a product team could analyze user interviews to grasp the essence of the "first-time user experience" with a new software feature, capturing frustrations, delights, and moments of confusion.

How to Implement It

- Immerse & Bracket: Start by repeatedly reading your transcripts to get a holistic feel for the participant's story. Critically, you must document your own assumptions and biases in a memo to "bracket" them from the analysis.

- Identify Significant Statements: Highlight statements or phrases that directly relate to the experience being studied. These key quotes form the foundation of your analysis.

- Formulate Meanings: Group the significant statements into clusters of meaning, often called "meaning units." For example, statements about navigating an app's interface could be grouped under a meaning unit like "Struggle for Control."

- Synthesize the Essence: Integrate these meaning units into a rich, detailed description of the experience's essential structure. This final narrative should articulate the core components that define the phenomenon for your participants.

6. Narrative Analysis

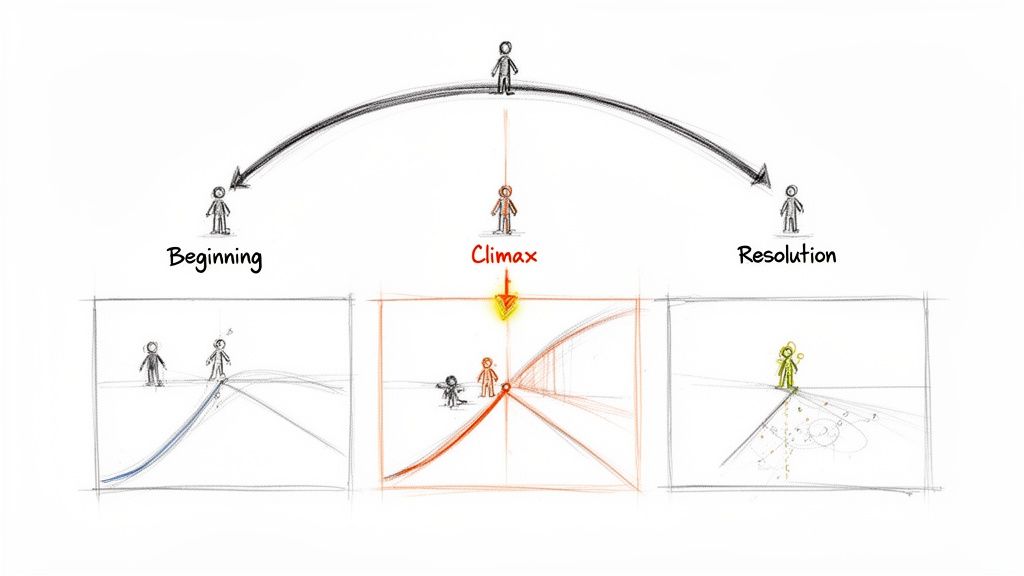

Narrative analysis is a fascinating qualitative data analysis method that focuses on the stories people tell. Instead of breaking down data into thematic categories, this approach examines how individuals construct, sequence, and find meaning in their experiences. It analyzes the narrative itself: the plot, characters, setting, and overarching message, treating the story as the unit of analysis.

This method is particularly valuable for understanding personal experiences, cultural values, and organizational dynamics through the lens of storytelling. Popularized by foundational thinkers like Jerome Bruner and Margaret Somers, narrative analysis helps reveal the intricate ways people make sense of their world and communicate that understanding to others.

When to Use Narrative Analysis

Use this method when your goal is to understand how people frame and interpret events through storytelling. It’s perfect for analyzing customer journey testimonials, employee interviews about organizational change, or podcast episodes that rely heavily on personal accounts. The modern landscape also presents new forms of narrative content; for instance, examining the process of creating AI video content from Reddit stories showcases how digital narratives are transformed and consumed.

How to Implement It

- Identify & Isolate Narratives: Read through your transcripts to identify distinct stories. Unlike other methods, it’s crucial to keep the narrative whole rather than fragmenting it with codes.

- Analyze Narrative Structure: Map out the key components of each story: the beginning (exposition), rising action, climax, falling action, and resolution. Use timestamps from your original audio to pinpoint key turning points.

- Examine Content & Context: Analyze what is said, what is left unsaid, and how the speaker positions themselves and others within the story. Consider how the broader context influences the telling of the narrative.

- Develop an Interpretation: Synthesize your findings to build an interpretation of what the stories reveal. Focus on how the narrative construction creates a particular meaning or achieves a specific purpose for the storyteller.
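If you tag each narrative stage with the timestamp where it begins in the original audio, you can see how much time a speaker spends on each part of the story, which can hint at what they choose to emphasize. This is a hypothetical sketch: the segment data, the `Segment` class, and the `stage_durations` helper are assumptions for illustration, and real stories often skip, repeat, or reorder stages.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    stage: str    # narrative stage assigned by the analyst
    start: float  # seconds from the start of the recording
    quote: str    # short excerpt marking where the stage begins


def parse_ts(ts: str) -> float:
    """Convert an 'MM:SS' or 'HH:MM:SS' timestamp to seconds."""
    seconds = 0.0
    for part in ts.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


# Hypothetical stages from one interviewee's story, in the order they were told.
story = [
    Segment("exposition", parse_ts("02:10"), "I'd been at the company for ten years."),
    Segment("rising action", parse_ts("03:45"), "Then the merger was announced."),
    Segment("climax", parse_ts("06:20"), "That was the day my whole team was let go."),
    Segment("falling action", parse_ts("07:05"), "I spent months rebuilding my network."),
    Segment("resolution", parse_ts("09:30"), "Now I run my own consultancy."),
]
story_end = parse_ts("10:15")


def stage_durations(segments, end):
    """Seconds spent on each stage: from its start to the next stage's start (or the story's end)."""
    boundaries = [s.start for s in segments[1:]] + [end]
    return {s.stage: b - s.start for s, b in zip(segments, boundaries)}


print(stage_durations(story, story_end))
```

For this invented story, the teller spends 155 seconds on the rising action and only 45 on the climax itself. A contrast like that is something to interpret, not a finding in its own right.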

7. Framework Analysis (Qualitative Content Mapping)

Framework analysis is a highly structured, matrix-based method for organizing and making sense of qualitative data. It involves using a predefined thematic framework to systematically categorize and compare information across different cases, such as multiple interviews or focus groups. Popularized by Jane Ritchie and Liz Spencer, this method is valued for its transparent and systematic process.

The core of this technique is the creation of a matrix, where columns represent codes or themes and rows represent individual participants or cases. This grid-like structure allows researchers to easily summarize data and identify patterns, both within a single case and across the entire dataset. It provides a clear, auditable trail from the original raw data to the final conclusions.

When to Use Framework Analysis

This method is especially powerful in applied or policy-focused research where you have specific questions and a relatively structured dataset. For example, a government agency could use it to evaluate feedback from various stakeholder meetings on a new policy, mapping responses to predefined areas of interest like "economic impact" or "community concerns." It's also ideal for legal teams organizing testimony transcripts by key case elements.

How to Implement It

- Develop a Framework: Begin by identifying key themes and concepts to create a working analytical framework. This can be based on your research questions or initial impressions from the data.

- Code the Data: Systematically read through your transcripts and apply codes from your framework to relevant excerpts.

- Create the Matrix: Build a spreadsheet or matrix. Create a column for each code and a row for each participant.

- Summarize & Chart: Populate the matrix by summarizing the data from each transcript that corresponds to each code. This charting process helps you visualize patterns, contradictions, and connections across the dataset efficiently.
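The charting step maps naturally onto a nested dictionary, or a spreadsheet with one row per participant and one column per code. This minimal sketch assumes hypothetical stakeholder summaries and framework codes; `build_matrix` is an illustrative helper that raises an error if a summary is charted under a code that is not in the framework, so drift from the agreed framework is caught early.

```python
# Hypothetical charted summaries: (participant, framework code, analyst's summary of the excerpt).
charted = [
    ("Stakeholder A", "economic impact", "Worried about costs for small shops"),
    ("Stakeholder A", "community concerns", "Wants more public meetings"),
    ("Stakeholder B", "economic impact", "Expects new jobs"),
    ("Stakeholder C", "community concerns", "Fears traffic near the school"),
    ("Stakeholder C", "community concerns", "Supports the new park"),
]
framework = ["economic impact", "community concerns"]


def build_matrix(rows, codes):
    """Rows = participants, columns = framework codes, cells = summaries joined with '; '."""
    participants = sorted({p for p, _, _ in rows})
    matrix = {p: {c: [] for c in codes} for p in participants}
    for participant, code, summary in rows:
        if code not in matrix[participant]:
            raise KeyError(f"code {code!r} is not in the framework")
        matrix[participant][code].append(summary)
    return {p: {c: "; ".join(s) for c, s in cells.items()} for p, cells in matrix.items()}


matrix = build_matrix(charted, framework)
# Print as a simple grid; empty cells are shown as "-".
print(" | ".join(["Participant"] + framework))
for participant, cells in matrix.items():
    print(" | ".join([participant] + [cells[c] or "-" for c in framework]))
```

Empty cells are part of the analysis too: in this example, Stakeholder B says nothing about community concerns, which may be worth following up.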

8. Conversation Analysis

Conversation Analysis (CA) is a meticulous qualitative data analysis method focused on the structure and mechanics of human interaction. It treats talk not just as a carrier of information, but as a social activity with its own rules and order. Researchers using CA examine the fine-grained details of conversations, such as turn-taking, pauses, overlaps, and repairs, to understand how participants collaboratively construct meaning.

Developed by sociologists Harvey Sacks, Emanuel Schegloff, and Gail Jefferson, this approach analyzes how interaction is sequentially organized. It moves beyond what is said to how it is said, revealing the underlying social order in everything from a casual chat to a formal business meeting. This method is incredibly powerful for uncovering the subtle, often unconscious, patterns that govern our daily communication.

When to Use Conversation Analysis

This method is perfect when your research question centers on the mechanics of interaction itself. For example, a customer service organization could analyze call center transcripts to pinpoint exactly where communication breaks down and use those findings for training. Healthcare researchers might examine patient-provider dialogues to improve communication and diagnostic accuracy. It's also invaluable for legal professionals scrutinizing witness testimony for hesitation patterns, such as long pauses or self-repairs, that may signal uncertainty.

How to Implement It

- Create Detailed Transcripts: Start with a high-fidelity transcript. For CA, this means capturing not just words but also filler words ("um," "uh"), pauses (measured in tenths of a second), overlaps, and even intonation. Jeffersonian transcription notation is the standard for this level of detail.

- Identify Sequential Patterns: Focus on specific conversational phenomena. Analyze turn-taking sequences, how questions are asked and answered, or how participants repair misunderstandings. The goal is to identify recurring, systematic patterns in the interaction.

- Analyze a Single Case: Begin with a close analysis of a single, clear example of the phenomenon you are studying. Describe how the participants orient to the rules and structures of the conversation in that specific instance.

- Build a Collection: After analyzing a single case, gather other instances of the same phenomenon from your dataset. This helps demonstrate that the pattern is not an isolated event but a systematic feature of the interaction.
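Once a transcript uses Jeffersonian notation, timed pauses appear as numbers in parentheses, such as (0.8), and micropauses as (.). A short script can pull these out to help you assemble a collection of candidate instances, for example long silences before an agent responds. This is a sketch under several assumptions: speaker labels take the form `AGT:`, a pause on its own line is treated as a gap between turns, and micropauses are counted as 0.1 seconds purely for sorting. The sequential analysis of each instance still has to be done by hand.

```python
import re

# A timed pause such as "(0.8)" or a micropause "(.)" in Jeffersonian notation.
PAUSE = re.compile(r"\((\d+(?:\.\d+)?|\.)\)")
# Assumed speaker-label format at the start of a line, e.g. "AGT:".
LABEL = re.compile(r"\s*([A-Z]{2,}):")

# Hypothetical call-centre excerpt transcribed with Jeffersonian pause symbols.
excerpt = """\
AGT: how can I help you today
     (0.8)
CLT: yeah hi (.) um my order hasn't arrived
AGT: okay (1.2) let me check that for you"""


def find_pauses(transcript, min_seconds=0.0):
    """Return (line number, owner, seconds) for each pause at or above min_seconds."""
    found = []
    for lineno, line in enumerate(transcript.splitlines(), start=1):
        label = LABEL.match(line)
        # A pause on its own line is treated here as a gap between turns.
        owner = label.group(1) if label else "gap"
        for m in PAUSE.finditer(line):
            # Micropauses are too short to time; 0.1 s is an assumed value for sorting only.
            seconds = 0.1 if m.group(1) == "." else float(m.group(1))
            if seconds >= min_seconds:
                found.append((lineno, owner, seconds))
    return found


for lineno, owner, seconds in find_pauses(excerpt, min_seconds=0.5):
    print(f"line {lineno} ({owner}): {seconds:.1f} s")
```

Keeping the line number with each hit lets you jump straight back to the sequence around it, which is where the single-case analysis happens.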

9. Interpretive Phenomenological Analysis (IPA)

Interpretive Phenomenological Analysis (IPA) is a deep, immersive approach to understanding how people make sense of their major life experiences. It combines principles from phenomenology (the study of experience) and hermeneutics (the theory of interpretation), focusing on the detailed examination of personal accounts. The researcher's role is not just to report but to interpret the participant's meaning-making process.

Developed by Jonathan Smith, IPA is an idiographic method, meaning it begins with a meticulous analysis of a single case before moving to others. This focus makes it one of the most powerful qualitative data analysis methods for exploring subjective lived experiences, like understanding a patient's journey with a chronic illness or a founder's perspective on a business failure.

When to Use IPA

IPA is best suited for small-scale, in-depth studies where the goal is to gain a rich understanding of a specific phenomenon from the perspective of those who have lived it. Use it when you need to explore complex, ambiguous, or emotionally significant topics. For instance, a researcher could use IPA to analyze transcribed interviews with first-generation college students to understand their transition to university life.

How to Implement It

- In-Depth Interviews & Transcription: Conduct semi-structured, in-depth interviews to encourage rich narratives. Use a service like MeowTxt to get accurate, verbatim transcripts, which are crucial for the detailed analysis IPA requires.

- Initial Annotation: Read through a single transcript multiple times. Make initial notes and comments in the margins, focusing on descriptive, linguistic, and conceptual observations.

- Develop Emergent Themes: Transform your initial notes into emergent themes that capture the essential psychological meaning of the participant's account. Group these themes hierarchically.

- Cross-Case Analysis: Repeat the process for each case. Once you have analyzed a few cases individually, look for patterns and connections across them to develop a set of superordinate themes for the entire group.
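When moving to cross-case analysis, some IPA studies report how often each theme recurs across participants alongside the interpretive account. The sketch below shows that bookkeeping with invented themes for four hypothetical participants; the helpers are illustrative and the 75% threshold is an arbitrary choice. Superordinate themes still come from interpretive clustering, not from a frequency cut-off.

```python
# Hypothetical emergent themes per participant, each case analysed on its own first.
case_themes = {
    "P1": {"feeling like an outsider", "family expectations", "finding a mentor"},
    "P2": {"feeling like an outsider", "financial strain"},
    "P3": {"family expectations", "feeling like an outsider", "financial strain"},
    "P4": {"finding a mentor", "financial strain"},
}


def recurrence(cases):
    """Map each theme (alphabetically) to the sorted list of participants whose accounts contain it."""
    table = {}
    for participant, themes in cases.items():
        for theme in themes:
            table.setdefault(theme, []).append(participant)
    return {theme: sorted(people) for theme, people in sorted(table.items())}


def recurrent_themes(cases, min_share):
    """Themes present in at least `min_share` of cases; the threshold is a study-specific choice."""
    n = len(cases)
    return [theme for theme, people in recurrence(cases).items() if len(people) / n >= min_share]


for theme, people in recurrence(case_themes).items():
    print(f"{theme}: {', '.join(people)} ({len(people)}/{len(case_themes)})")
print("Recurs in at least 75% of cases:", recurrent_themes(case_themes, min_share=0.75))
```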

10. Mixed Methods Integration (Qual + Quant from Transcripts)

Mixed methods integration combines the depth of qualitative analysis with the statistical power of quantitative metrics. Instead of treating qualitative and quantitative data as separate, this approach uses them to enrich and validate one another. It involves analyzing textual data like interview transcripts for themes while simultaneously extracting numerical data such as word counts, sentiment scores, or frequency of specific terms.

This powerful technique, championed by researchers like John Creswell, provides a more comprehensive and robust understanding than a single method could alone. By triangulating findings, you can build a more convincing and multi-dimensional narrative. For instance, you can identify a key theme in your qualitative data and then use quantitative metrics to demonstrate its prevalence or impact across the entire dataset.

When to Use Mixed Methods Integration

This approach is perfect when you need to add a layer of objective validation to your qualitative findings or explain the "why" behind quantitative trends. A podcast network could combine qualitative theme analysis of popular episodes with quantitative listener engagement data to understand what topics truly drive retention. Similarly, a market research team can integrate qualitative feedback themes from customer interviews with quantitative survey ratings to build a complete picture of customer satisfaction.

How to Implement It

- Export and Prepare Data: Use a transcription service that allows you to export transcripts in multiple formats. A DOCX file is ideal for qualitative coding, while a CSV export is perfect for running quantitative analysis. You can learn more about finding the right tools with this guide on transcription software for interviews.

- Conduct Parallel Analysis: Perform your qualitative analysis (like thematic or narrative) and your quantitative analysis (like frequency counts or sentiment scoring) separately at first.

- Integrate and Interpret: Correlate your findings. Does a frequently mentioned topic in your transcripts also align with higher engagement metrics? Do negative sentiment scores correspond with specific themes of customer frustration?

- Visualize and Report: Create visual dashboards that combine your narrative insights with charts and graphs. This integrated reporting makes your conclusions more compelling and easier for stakeholders to understand.

Comparison of 10 Qualitative Data Analysis Methods

| Method | Implementation complexity 🔄 | Resource requirements ⚡ | Expected outcomes 📊⭐ | Ideal use cases 💡 | Key advantages ⭐ |
|---|---|---|---|---|---|
| Thematic Analysis | 🔄 Low–Moderate: systematic six-phase coding | ⚡ Moderate: time for coding; scalable with software | 📊 Clear, interpretable themes; ⭐⭐⭐⭐ | Podcasts, lectures, interview series | Accessible, scalable, cost-effective |
| Content Analysis | 🔄 Moderate: requires predefined coding scheme | ⚡ Moderate–High: manual coding or automated preprocessing | 📊 Quantifiable, comparable metrics; ⭐⭐⭐⭐ | Legal review, brand mentions, topic frequency studies | Objective, replicable, presentation-ready |
| Grounded Theory | 🔄 Very High: iterative open/axial/selective coding | ⚡ Very High: large data, extensive memoing | 📊 Emergent theoretical frameworks; ⭐⭐⭐⭐⭐ | Exploratory research, new phenomena, theory building | Produces novel, data-driven theories |
| Discourse Analysis | 🔄 High: interpretive, language-focused work | ⚡ High: expert linguistic/theoretical input | 📊 Deep rhetorical and power analyses; ⭐⭐⭐ | Media critique, persuasion, identity/power studies | Reveals ideologies, nuance in language use |
| Phenomenological Analysis | 🔄 High: bracketing and thick description required | ⚡ Moderate–High: in-depth transcript reading | 📊 Rich descriptions of lived experience; ⭐⭐⭐⭐ | Patient narratives, user experience, testimonials | Deep empathy, validates subjective perspectives |
| Narrative Analysis | 🔄 Moderate–High: analyzes story structure and sequencing | ⚡ Moderate: close reading, context preservation | 📊 Insight into story arcs and meaning; ⭐⭐⭐ | Documentaries, storytelling podcasts, customer journeys | Captures how people construct meaning through stories |
| Framework Analysis | 🔄 Moderate: matrix-based, predefined framework | ⚡ Moderate: framework setup, spreadsheet/matrix tools | 📊 Structured, comparable displays; ⭐⭐⭐⭐ | Policy, healthcare evaluations, program feedback | Transparent, organized, team-friendly |
| Conversation Analysis | 🔄 Very High: micro-level turn-by-turn analysis | ⚡ Very High: detailed transcription/notation required | 📊 Micro-interaction patterns; ⭐⭐⭐⭐ | Call centers, clinician–patient interactions, meeting dynamics | Reveals interaction mechanics; highly replicable |
| Interpretive Phenomenological Analysis (IPA) | 🔄 High: idiographic and double-hermeneutic approach | ⚡ High (per case): deep analysis of small samples | 📊 Detailed individual meaning-making; ⭐⭐⭐⭐ | Sensitive topics, in-depth interview studies | Rich, nuanced individual insights; interpretive depth |
| Mixed Methods Integration | 🔄 High: combines qualitative and quantitative workflows | ⚡ High: tools/skills for both qual & quant | 📊 Triangulated, robust findings; ⭐⭐⭐⭐⭐ | Evaluation, market research, comprehensive program analysis | Combines breadth and depth; increases validity through triangulation |

Choosing Your Method and Powering Your Analysis

Navigating the landscape of qualitative data analysis methods can feel overwhelming, but the key isn't to find a single "best" method. The most successful analysis comes from making a deliberate choice: selecting the framework that aligns perfectly with your specific research question, the nature of your data, and the story you want to tell. From the flexible, pattern-seeking nature of Thematic Analysis to the micro-level precision of Conversation Analysis, each approach offers a unique lens for interpreting the rich human experiences captured in your data.

Your journey from raw data to compelling insight hinges on this crucial decision. The methods discussed here, including Grounded Theory, Discourse Analysis, and Narrative Analysis, are not just academic exercises. They are practical toolkits for podcasters trying to understand audience feedback, legal teams dissecting witness testimony, or business leaders evaluating meeting effectiveness. The common thread uniting all these powerful qualitative data analysis methods is the need for a solid, reliable foundation: clean, accurate, and well-structured data.

From Raw Audio to Actionable Insights

The most robust analysis begins long before you start coding or identifying themes. It starts with the quality of your transcript. A flawed or inaccurate transcript can compromise your entire project, introducing errors and hiding the very nuances you're trying to find. This is where modern tools become indispensable, turning the foundational step of transcription into a strategic advantage. An accurate, speaker-identified, and timestamped transcript is the bedrock on which rigorous analysis is built.

As technology continues to evolve, so do the tools we have for making sense of complex data. For example, one researcher has demonstrated how artificial intelligence can help analyze notes from 50 research conversations. If you want to enhance your own analysis, you might find it useful to explore this practical account of analyzing research notes with AI tools like ChatGPT and NotionAI.

Your Next Step: Empower Your Analysis

Ultimately, the goal is to move beyond simply organizing data to truly understanding it. The right method, applied to high-quality data, empowers you to extract meaningful patterns, construct compelling narratives, and generate insights that drive decisions, inform strategy, or advance knowledge. By mastering these approaches, you equip yourself to find the signal in the noise and translate conversations into conclusions. Your data holds the answers; these methods provide the key to unlocking them.

Ready to lay the foundation for your next project? Before you dive into any of these qualitative data analysis methods, ensure your data is clean, accurate, and ready for scrutiny with meowtxt. Transform your audio and video files into precise, editable transcripts and start your analysis with the confidence that only a high-quality data set can provide. Visit meowtxt to see how seamless transcription can elevate your research.