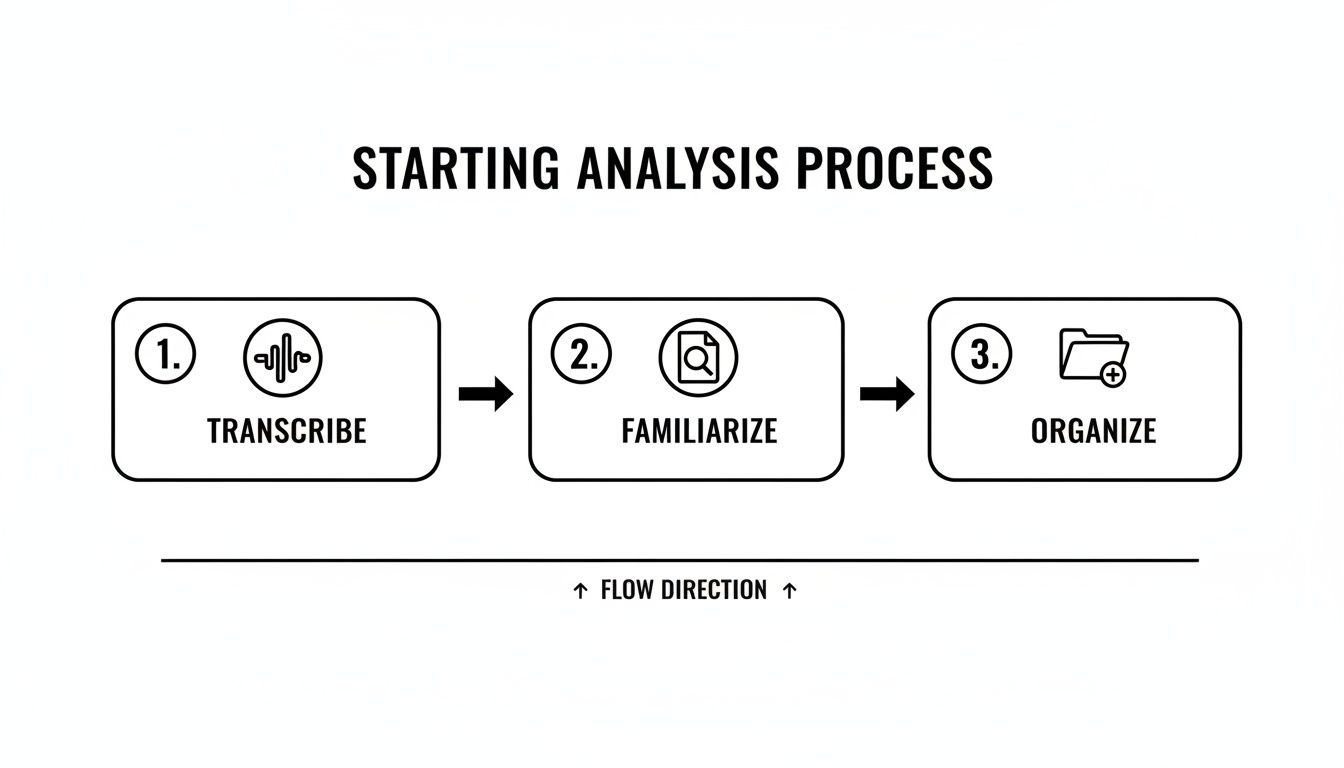

Staring at a folder full of interview transcripts can feel overwhelming. Where do you even begin? This initial phase is all about preparation, turning those raw conversations into organized data before you dive into the deeper analytical work of uncovering insights.

The entire process of analyzing qualitative interview data hinges on having an accurate, readable transcript of each conversation. This isn’t just a simple clerical task; it’s the bedrock for everything that follows. Without a solid transcript, crucial nuances are lost, and the integrity of your findings is compromised. To start on the right foot, it’s worth reviewing a detailed guide on how to properly transcribe an interview.

This initial sequence ensures you build a strong, organized base before diving into the more complex work of interpretation and theme development.

The Power of Familiarization

Before you even think about highlighting text or applying codes, the first real analytical step is familiarization. This means immersing yourself in the data. It's far more than a quick read-through; it's an active process of getting to know your participants' stories and perspectives.

During this stage, I always keep a notebook handy. Jot down initial thoughts, recurring phrases, or surprising comments. These early observations are incredibly valuable and often guide the more structured coding efforts later on.

The goal of familiarization is to let the data speak to you first. By reading and re-reading, you begin to notice the tone, the context, and the unspoken meanings that a surface-level scan would miss.

Streamlining the Groundwork

Not long ago, this preparatory stage was a massive time sink. Manually transcribing a one-hour interview typically required 4–6 hours of work. For a modest study with 20 interviews, that’s 80–120 hours of labor before analysis could even begin.

Today, AI-driven transcription has completely changed the game. These tools can process audio at 20–40× real-time speed with impressive accuracy. A process that once took weeks can now be completed in a matter of days, freeing you up to focus on the much more interesting work of finding meaning in your qualitative data.
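To make that arithmetic concrete, here is a minimal sketch that reproduces the figures above. The function names are made up for illustration, and the AI estimate covers machine processing only; it assumes one hour of audio per interview and leaves out the time you still need to proofread each transcript.

```python
def manual_transcription_hours(interviews, audio_hours_each=1.0, effort_per_audio_hour=(4, 6)):
    """Return (low, high) total hours of manual transcription effort."""
    total_audio_hours = interviews * audio_hours_each
    low, high = effort_per_audio_hour
    return total_audio_hours * low, total_audio_hours * high


def ai_processing_minutes(interviews, audio_hours_each=1.0, speedup=(20, 40)):
    """Return (fastest, slowest) machine processing time in minutes."""
    total_audio_minutes = interviews * audio_hours_each * 60
    slow_factor, fast_factor = speedup
    return total_audio_minutes / fast_factor, total_audio_minutes / slow_factor


print(manual_transcription_hours(20))  # (80.0, 120.0)
print(ai_processing_minutes(20))       # (30.0, 60.0)
```

For the 20-interview study, that is 80 to 120 hours of typing versus roughly 30 to 60 minutes of processing, before review.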

Finding Meaning Through Coding And Thematic Analysis

Once you’re truly familiar with your interview transcripts—once you can almost hear the participants' voices as you read—the real journey of discovery begins. This is where you start turning hours of scattered comments and personal stories into a coherent, powerful narrative.

The backbone of this process is coding. Don't let the technical term intimidate you. It's simply the act of tagging or labeling segments of your data to keep things organized.

Think of yourself as a detective sifting through evidence. Every time a participant mentions something that feels significant—a specific pain point, a brilliant idea, a strong emotion—you attach a short label, or "code," to that snippet of text. Mastering this is a foundational skill for anyone learning how to analyze qualitative interview data.

Open Coding vs. Structured Coding

Your coding approach isn't set in stone. The two most common paths are open and structured coding, and your choice really depends on your research objectives.

- Open Coding (Inductive): This is a "bottom-up" approach where you let ideas emerge organically from the data itself. You read through your transcripts without a predefined checklist of codes, creating new ones on the fly as you spot interesting patterns. This method is perfect for exploratory research where the findings are unknown.

- Structured Coding (Deductive): This is a "top-down" method. You start with a pre-made list of codes based on your research questions or an existing theory. This approach is much more efficient when you're trying to confirm a hypothesis or are looking for specific information within the interviews.

In practice, many researchers (myself included) use a hybrid approach. I'll often start with a handful of structured codes tied to my main questions but remain open to creating new ones whenever unexpected insights appear. It truly offers the best of both worlds.
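If it helps to see the hybrid approach as a workflow, here is a rough sketch. Everything in it is hypothetical: the codebook, the keywords, and the transcript segments are invented for illustration, and simple keyword matching is only a crude stand-in for the judgment a human coder applies. Treat the output as suggestions to review, not as finished coding.

```python
# Hypothetical starting codebook (the structured, deductive part):
# each code maps to keywords that hint at it.
codebook = {
    "Pricing": ["price", "cost", "expensive"],
    "Features": ["feature", "export", "dashboard"],
}

# Made-up transcript segments, for illustration only.
segments = [
    "The price felt too high for a small team.",
    "I love the export feature.",
    "Honestly, the forum made me feel like part of a group.",
]


def suggest_codes(segment, codebook):
    """Return the codes whose keywords appear in the segment (case-insensitive)."""
    text = segment.lower()
    return [code for code, keywords in codebook.items()
            if any(keyword in text for keyword in keywords)]


def code_segments(segments, codebook):
    """Split segments into {segment: codes} and a list of segments no code matched."""
    coded, uncoded = {}, []
    for segment in segments:
        codes = suggest_codes(segment, codebook)
        if codes:
            coded[segment] = codes
        else:
            uncoded.append(segment)
    return coded, uncoded


# First pass: structured codes only.
coded, uncoded = code_segments(segments, codebook)
print(uncoded)  # the forum comment matches nothing yet

# Open (inductive) step: after reading the uncoded segments, add a new code.
codebook["Community Belonging"] = ["forum", "community", "part of a group"]
coded, uncoded = code_segments(segments, codebook)
print(coded[segments[2]])  # ['Community Belonging']
```

The useful part is the `uncoded` list: anything your predefined codes miss is a prompt to read more closely and decide whether a new code is warranted.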

The Power of Thematic Analysis

Coding is the first crucial step, but the ultimate goal is to identify the big, overarching ideas. This is where thematic analysis excels. It's a hugely popular and flexible method for identifying, analyzing, and reporting patterns—or themes—within qualitative data.

In fact, thematic analysis has become the go-to method for researchers working with interview data. A 2019 review of top social science journals found that over 60% of qualitative articles using interviews relied on this technique.

The process is straightforward: you familiarize yourself with the data, generate initial codes, group those codes into potential themes, and then refine those themes until they tell a clear and compelling story. You're moving from small, specific data points to the broad, meaningful themes that answer your core research questions.

Comparing Coding Approaches

Choosing a coding method can feel daunting, but it really boils down to your research goals. Are you exploring new territory or confirming an existing theory? This table breaks down the common approaches to help you decide.

| Coding Approach | Best For | Example |
|---|---|---|
| Open Coding | Exploratory research where themes are unknown. Generating new theories from scratch. | Reading user feedback with no preconceptions, creating codes like "login frustration" and "confusing UI" as they appear. |
| Structured Coding | Testing a specific hypothesis. Research based on an established theoretical framework. | Analyzing interviews for evidence of a known psychological theory, using pre-defined codes like "Cognitive Dissonance" or "Confirmation Bias." |
| Hybrid Approach | Research with specific questions but also an openness to discovering unexpected insights. | Starting with codes for "Pricing" and "Features" but adding a new code like "Community Belonging" after several participants mention it. |

Ultimately, the best approach is the one that fits your data and your questions. Don't be afraid to start with one method and adapt as you learn more from your interviews.

From Codes to Themes: An Example

Let’s make this concrete. Imagine you’re analyzing customer interviews for a new software app. During your first pass at coding, you might create very specific codes like these:

- "Frustrated with login"

- "Confusing dashboard layout"

- "Couldn't find the help button"

- "Too many steps to start"

This process involves highlighting specific phrases in a transcript and assigning short, descriptive codes to them, just like in this screenshot.

Each code captures a single, distinct piece of feedback. It's a close-up, detailed view of the user's experience.

After you've coded a few interviews, you'll start to see patterns emerge. The codes "Frustrated with login," "Confusing dashboard layout," and "Too many steps to start" all seem to point toward the same larger problem. You can group them under a broader theme, perhaps something you call "Onboarding Friction Points."

A code is a label for a single idea. A theme is the story that a group of related codes tells you. Moving from codes to themes is where you transition from simply organizing data to interpreting its meaning.

This thematic grouping is the heart of qualitative analysis. Instead of just giving stakeholders a long list of individual complaints, you can now present a major, evidence-backed finding: "New users struggle with initial setup due to multiple friction points in the onboarding process."

That’s a powerful, actionable insight derived directly from your interview data. If you want to dive deeper into this and other methods, you can explore other qualitative data analysis techniques in our detailed guide.
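The grouping of codes into the "Onboarding Friction Points" theme can also be sketched in a few lines. The participant IDs and coded excerpts below are invented placeholders. The fourth code stays unassigned on purpose, since the example above does not place it under a theme.

```python
from collections import Counter

# Code -> theme mapping taken from the example above.
theme_map = {
    "Frustrated with login": "Onboarding Friction Points",
    "Confusing dashboard layout": "Onboarding Friction Points",
    "Too many steps to start": "Onboarding Friction Points",
}

# Hypothetical coded excerpts as (participant_id, code) pairs.
coded_excerpts = [
    ("P1", "Frustrated with login"),
    ("P1", "Too many steps to start"),
    ("P2", "Confusing dashboard layout"),
    ("P2", "Couldn't find the help button"),
    ("P3", "Frustrated with login"),
]

theme_counts = Counter()        # how many excerpts support each theme
participants_by_theme = {}      # which participants raised each theme
unassigned_codes = set()        # codes that still need a home

for participant, code in coded_excerpts:
    theme = theme_map.get(code)
    if theme is None:
        unassigned_codes.add(code)
        continue
    theme_counts[theme] += 1
    participants_by_theme.setdefault(theme, set()).add(participant)

print(theme_counts["Onboarding Friction Points"])                  # 4
print(sorted(participants_by_theme["Onboarding Friction Points"]))  # ['P1', 'P2', 'P3']
print(unassigned_codes)
```

Counting participants as well as excerpts is a deliberate choice here: a theme raised once by three people is usually stronger evidence than a theme raised three times by one person.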

How Many Interviews Are Actually Enough?

It’s the question every qualitative researcher asks: "Am I done yet?" The good news is, the answer isn’t a wild guess. The bad news? It’s not a magic number, either.

The real answer is a concept called saturation. This is the point where conducting one more interview doesn't teach you anything new. You start hearing the same stories, the same pain points, and the same suggestions on repeat.

Saturation isn't just about repetition; it's about confidence. It's that moment you realize your understanding is solid and another interview is unlikely to fundamentally change what you've found.

Knowing when you've hit this point is crucial. It lets you justify your sample size and confidently stop collecting data, saving a massive amount of time and resources. You can then shift your focus to the much deeper work of making sense of the rich data you've already collected.

The Two Types of Saturation You Need to Know

Not all saturation is the same. Understanding the difference between theme saturation and meaning saturation is key to knowing if you've really dug deep enough for your project.

- Theme Saturation: Think of this as the surface level. It's when you stop identifying new codes or major themes. For instance, after your eighth interview about user onboarding, you realize no new types of "onboarding friction" are emerging.

- Meaning Saturation: This is the real prize. It's when you don't just know what the themes are, but you fully grasp their nuances and complexities. You can explain why that friction exists and how it feels different for various users.

In short, theme saturation tells you the "what." Meaning saturation tells you the "why" and "how."
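Theme saturation is the easier of the two to track, because you can count how many new codes each interview adds. Here is a minimal sketch. The stopping rule (three interviews in a row with no new codes) is an assumption chosen for illustration, not an established threshold, and the interview data is invented. Nothing here measures meaning saturation, which still depends on your own judgment.

```python
def new_codes_per_interview(codes_by_interview):
    """For each interview, in order, count the codes not seen in any earlier interview."""
    seen = set()
    counts = []
    for codes in codes_by_interview:
        fresh = set(codes) - seen
        counts.append(len(fresh))
        seen |= fresh
    return counts


def stopping_interview(new_code_counts, run_length=3):
    """Return the 1-based interview number at which `run_length` consecutive
    interviews have added no new codes, or None if that never happens."""
    run = 0
    for number, count in enumerate(new_code_counts, start=1):
        run = run + 1 if count == 0 else 0
        if run == run_length:
            return number
    return None


# Hypothetical codes applied in six interviews.
codes_by_interview = [
    ["Frustrated with login", "Confusing dashboard layout"],
    ["Frustrated with login", "Too many steps to start"],
    ["Couldn't find the help button"],
    ["Frustrated with login"],
    ["Too many steps to start", "Confusing dashboard layout"],
    ["Couldn't find the help button"],
]

counts = new_codes_per_interview(codes_by_interview)
print(counts)                      # [2, 1, 1, 0, 0, 0]
print(stopping_interview(counts))  # 6
```

A tally like this also gives you something concrete to cite when you justify your sample size.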

So, What's the Real Number?

For years, researchers relied on vague rules of thumb. But a 2024 integrative review synthesized 22 years of research to provide some solid, evidence-based targets.

For theme saturation—just hearing the main ideas—most studies hit the mark around 9 individual interviews. But to reach the far more valuable goal of meaning saturation, the review suggests you're looking at something closer to 24 interviews.

For complex projects like building a new theory from the ground up (grounded theory), that number often climbs to 20–30+ interviews. You can dig into the specifics by reading the full research on these qualitative sample size findings.

This data offers a powerful starting point for planning your research.

Matching Your Sample Size to Your Project

The "right" number is completely tied to your goals. A quick usability test is a different beast than a deep-dive academic study.

Let's look at a couple of real-world scenarios:

- A UX study on a single feature: Your goal is narrow and tactical. You need to find the biggest usability issues in a new checkout flow. Theme saturation is probably all you need. After 10–12 interviews, you'll likely have a clear list of the top friction points and can confidently move forward.

- An academic project on career changes: Here, your goal is broad and exploratory. You want to understand the deep emotional and social drivers behind professional shifts. Meaning saturation is non-negotiable. You’d plan for 25 or more interviews to truly capture the full range of experiences.

Ultimately, the goal isn't just hitting a number. It's about reaching a point of deep understanding. When you can start predicting what your next participant will say and can explain your findings with nuance and evidence, that's when you know you've done enough.

Choosing The Right Tools For Your Analysis

There’s a certain old-school charm to analyzing data with highlighters and sticky notes, I get it. But let’s be real—modern tools can make your process dramatically more efficient and organized. Choosing the right tech isn't about replacing critical thinking; it’s about freeing up your brainpower to focus on interpretation rather than tedious administration.

The right toolkit can completely change how you handle qualitative interview data, especially when you're dealing with more than a handful of conversations. It’s the difference between being a librarian manually cataloging books and having a powerful search engine at your fingertips.

Dedicated Qualitative Analysis Software

For any project with a significant amount of data, dedicated Computer-Assisted Qualitative Data Analysis Software (CAQDAS) is a game-changer. Tools like NVivo, MAXQDA, or Dedoose are built from the ground up for this kind of work. They provide a structured environment to manage dozens of transcripts, code systematically, and actually see the connections between your themes.

Think of CAQDAS as your central command center. It helps you:

- Stay Organized: Keep all your transcripts, codes, memos, and notes in one searchable place. No more lost files or scattered notes.

- Code Systematically: Easily apply, review, and merge codes across multiple interviews. This is crucial for ensuring consistency.

- Visualize Connections: Use built-in tools to create charts and maps that show how different themes relate to each other. This is often where the "aha!" moments happen.

- Collaborate with Teams: Many platforms support team-based projects, so multiple researchers can code the same dataset while tracking everyone's changes.

This structured approach helps you maintain rigor and transparency, especially in complex studies where you need to document every analytical decision you make.

The Most Important Tool: The Transcription Game-Changer

Before you even think about coding, you must face the biggest bottleneck in the entire analysis process: transcription. Manually typing out interviews is incredibly time-consuming. It’s a motivation-killer that can drain your energy before the real work even begins. This is where AI-powered services have become absolutely indispensable.

Modern transcription tools have effectively eliminated the most tedious part of qualitative research. By automating the conversion of audio to text, they free you to spend your time finding meaning, not just typing words.

These services deliver fast, accurate, and, most importantly, searchable transcripts. They often include automatic speaker labeling and timestamps, which are invaluable for quickly locating key quotes. Honestly, integrating this tech into your workflow is one of the single biggest efficiency gains you can make. If you want to compare different options, our guide to the best transcription software for interviews is a great place to start.

Building Your Technology Stack

The modern toolkit for analyzing interviews isn’t about finding one single piece of software that does everything. It’s about creating a smart workflow that fits your project. For instance, you might collect initial feedback through online forms before diving into deeper interviews. When you're thinking about the tech for your project, checking out a comprehensive survey software comparison can help you find the right tools for collecting data that you'll later analyze qualitatively.

A common and highly effective workflow looks something like this:

- Record: Capture high-quality audio of your interviews. Good audio is the foundation for everything else.

- Transcribe: Use an AI service to quickly turn those audio files into accurate, speaker-labeled text documents.

- Analyze: Import the clean transcripts into a CAQDAS platform for deep coding and thematic analysis.

By automating the transcription phase, you save dozens of hours, which lets you dive straight into the high-value work of interpretation. This makes it way easier to manage large projects, collaborate with your team, and ultimately, produce more insightful findings from your qualitative interview data.

Making Sure Your Analysis Is Trustworthy

A great analysis isn't just about finding interesting patterns; it's about proving your findings are solid and not just a reflection of what you wanted to see. This stage is all about building in checks and balances to ensure credibility.

Ultimately, these practices make your conclusions rigorous, defensible, and trustworthy. If you skip them, you risk presenting a skewed story. Nail them, and your work will have a much bigger impact, whether you're presenting to academics or company stakeholders.

Acknowledging Your Own Biases With Reflexivity

Every researcher brings their own experiences and perspectives to the table. It’s impossible not to. The trick isn't to pretend you're a perfectly neutral robot, but to actively recognize and manage your own perspective. This practice is called reflexivity.

The easiest way to do this is to keep a research journal or memo. As you go through your analysis, jot down your thoughts, gut feelings, and any assumptions that pop into your head.

Ask yourself questions like:

- Why did that one quote jump out at me so much?

- Is my own background coloring how I'm interpreting this person's story?

- Am I ignoring data that doesn't fit my initial hunch?

This simple habit creates a transparent audit trail of your thought process. It shows you've been critical of your own influence on the results, which seriously boosts the credibility of your work. If you bring AI into the mix for analysis, apply the same rigor: learn the tactics for reducing hallucinations in LLMs so that trust stays intact.

Building Consistency With Inter-Coder Reliability

If you’re working on a team, how do you make sure "frustration" means the same thing to you as it does to your colleague? This is where inter-coder reliability comes in. It’s a formal term for a simple process: have multiple researchers code the same transcript independently, then compare the results.

The point isn't to hit a perfect 100% match. The real value is in the discussion about why your interpretations differed. Those conversations are gold for sharpening your codebook and making sure everyone on the team is on the same page.

Inter-coder reliability isn't just a box to check. It’s a collaborative gut-check that forces you to clarify definitions and standardize your approach, leading to findings that are much more consistent and reliable.
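If you want a number to go with that discussion, one commonly used statistic is Cohen's kappa, which corrects raw agreement for the agreement two coders would reach by chance. The sketch below is my own illustration rather than a method this guide prescribes. It assumes two coders who each assign exactly one code per segment, and the labels are invented.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one label per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(coder_a)
    # Observed agreement: share of segments where the labels match.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: based on how often each coder used each label.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for the same eight segments.
coder_a = ["frustration", "frustration", "pricing", "pricing",
           "praise", "frustration", "pricing", "praise"]
coder_b = ["frustration", "pricing", "pricing", "pricing",
           "praise", "frustration", "frustration", "praise"]

kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 2))  # 0.62

# The segments worth talking about are the ones where the coders disagreed.
disagreements = [i for i, (a, b) in enumerate(zip(coder_a, coder_b)) if a != b]
print(disagreements)  # [1, 6]
```

The score is only a summary. The list of disagreements is the part that feeds the conversation about your codebook.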

Strengthening Findings Through Triangulation and Member Checking

Two final techniques will further strengthen the credibility of your analysis.

First is triangulation. This just means using different data sources to see if they point to the same conclusion. For instance, you could compare what people said in interviews with your own observations from a field study or even results from a survey. If multiple, different sources are all telling you the same thing, your argument becomes much stronger.

Second is member checking, sometimes called respondent validation. This is where you take your preliminary findings back to the people you interviewed. You can ask them simple questions like, "Does this summary feel right to you?" or "Is this a fair take on what you told me?"

Their feedback is an incredible reality check. They don’t get the final say on your analysis, but this step helps ensure your interpretations actually make sense to the people whose experiences you’re trying to understand. Applying these strategies will transform your analysis from a simple summary into a robust, evidence-backed story.

Presenting Your Findings To Tell A Compelling Story

You’ve done the heavy lifting—the transcribing, coding, and checking your work. Now it's time to share what you discovered in a way that resonates with your audience. This is the final, crucial step in learning how to analyze qualitative interview data effectively.

Nobody wants to read a dry summary of your data. The goal is to deliver a story that sticks. Think of yourself as a storyteller: your themes are the chapters, and the quotes you’ve collected are the vivid dialogue that brings the narrative to life. A clear structure is your best friend here.

Crafting Your Narrative Structure

A simple, repeatable structure for your report or presentation makes all the difference. For each key theme you’ve identified, you want to guide your audience through a logical flow that builds a strong, evidence-based case. It’s all about making your insights impossible to ignore.

Here’s a practical framework I use for presenting each theme:

- State the theme clearly. Start with a single, punchy sentence. For example, "A primary barrier for new users was a deep-seated feeling of 'tech anxiety' during the initial setup process."

- Explain why it matters. Briefly unpack what this theme means in the context of your research. Why should anyone care about 'tech anxiety'? What's the impact?

- Show, don't just tell. This is where you bring in the voices of your participants. Back up your explanation with a couple of powerful, well-chosen quotes from your interviews.

This simple claim-significance-evidence pattern transforms a list of findings into a persuasive story that is easy for any audience to follow.
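If you draft reports from structured notes, the claim-significance-evidence pattern maps neatly onto a small template. This is only a sketch: the `ThemeFinding` class is a hypothetical helper, and the significance text and the quote are invented placeholders, not real interview data.

```python
from dataclasses import dataclass, field


@dataclass
class ThemeFinding:
    claim: str          # one-sentence statement of the theme
    significance: str   # why the theme matters
    quotes: list = field(default_factory=list)  # (participant_id, quote) pairs

    def render(self):
        """Return the finding as plain text: claim, significance, then quotes."""
        lines = [self.claim, "", self.significance, ""]
        for participant, quote in self.quotes:
            lines.append(f'"{quote}" ({participant})')
        return "\n".join(lines)


finding = ThemeFinding(
    claim=("A primary barrier for new users was a deep-seated feeling of "
           "'tech anxiety' during the initial setup process."),
    significance="Placeholder: explain the impact of this theme on your research question.",
    quotes=[("P4", "Placeholder quote: I was worried I would break something.")],
)

print(finding.render())
```

Keeping each finding in the same three-part shape makes it easy to spot a theme that has a claim but no supporting quotes yet.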

Choosing Quotes That Resonate

Not all quotes are created equal. The ones you select should do more than just prove a point; they need to add color, emotion, and authenticity. A good quote gives your audience a direct window into the participant's world.

When you're digging through your transcripts, look for quotes that are:

- Illustrative: They perfectly and concisely capture the essence of the theme.

- Articulate: The participant expressed the idea in a particularly clear or memorable way.

- Humanizing: They convey an emotion or a personal experience that helps the audience connect on a human level.

Pro Tip: Avoid using long, rambling quotes. A single, well-chosen sentence often has far more impact than a whole paragraph. Edit ruthlessly to find that golden nugget.

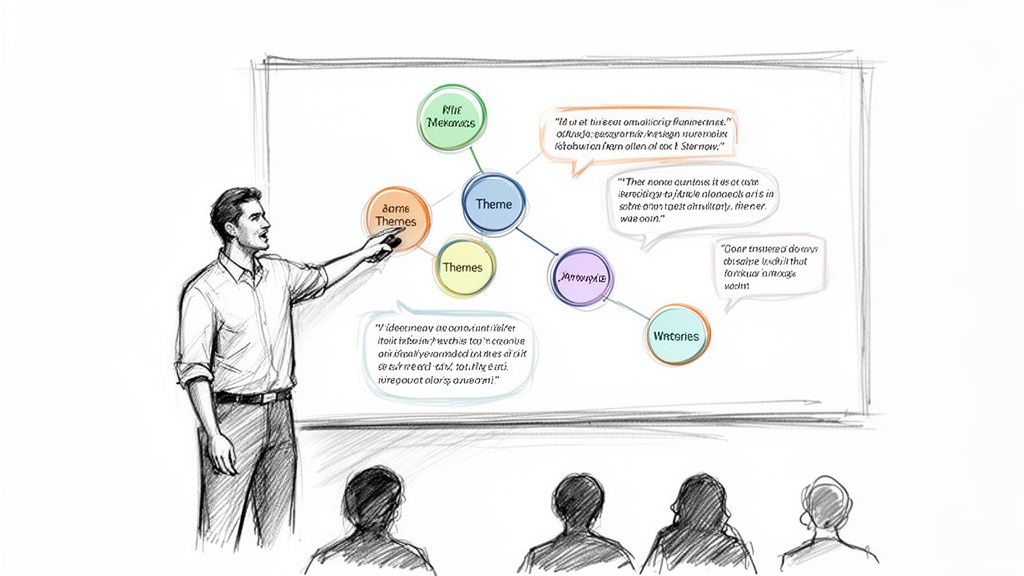

Using Visuals to Enhance Clarity

Finally, don't underestimate the power of simple visuals. You don't need to be a graphic designer to create visuals that make your findings easier to digest.

A simple theme map—a diagram showing your main themes and how they connect to smaller sub-themes—can provide a fantastic one-page overview of your entire analysis. This gives your audience the big picture before you dive into the details of each theme, making your compelling story even more memorable.

Still Have Questions? Let's Clear a Few Things Up

Diving into qualitative analysis always brings up a few common questions, especially for those new to the process. Let's tackle some of the most frequent ones I hear.

What’s the Difference Between a Theme and a Code?

It's easy to mix these two up, but the distinction is quite simple when you think about it practically.

Think of codes as the small, sticky-note labels you apply to individual snippets of your interview data. They're short, descriptive tags for a specific idea expressed in a single sentence or phrase. For example, you might apply the code 'high price' to a quote where a participant complains about cost.

A theme is what you discover after you’ve finished coding. It's the bigger, more meaningful pattern that emerges when you group a bunch of related codes together. After finding several codes like 'high price,' 'no free trial,' and 'expensive subscription,' you might group them to form the theme, 'Cost as a Barrier to Entry.' In essence, codes are the bricks; themes are the walls you build with them.

Can I Use Quantitative Data With My Interview Analysis?

Not only can you, but you absolutely should if you have the opportunity. This is called a mixed-methods approach, and it’s a powerhouse for generating really solid, convincing insights.

For instance, you might have survey data showing that 70% of users abandon their shopping cart—that’s the 'what'. Your interviews can then uncover the stories behind that number—the 'why'. Combining them tells a much richer, more complete story.

Combining numerical data with personal stories provides a powerful one-two punch. The numbers show the scale of an issue, while the interviews provide the human context that makes the data unforgettable.

How Do I Handle Conflicting Information From Different Interviewees?

First off, don't panic. Contradictory views aren't a sign of flawed data; they're a goldmine. This is where the really interesting insights often lie.

Instead of trying to figure out who is "right," your job is to explore the tension between the different viewpoints. This disagreement often points to a much more complex and nuanced reality than you first assumed.

Ask yourself why their perspectives might differ. Is it because of:

- Their unique roles in a company?

- How long they've used a product?

- Different personal backgrounds or pain points?

Investigating these conflicts is a core part of learning how to analyze qualitative interview data. It prevents you from oversimplifying your findings and helps you capture the true complexity of the topic. See contradictions as an invitation to dig deeper.
