Qualitative data analysis isn't some obscure academic art. It’s a crucial set of methods for understanding non-numerical information—like interview transcripts, open-ended survey answers, or detailed observation notes. The goal of using qualitative data analysis techniques is to move beyond numbers and dig into the why and how behind human behavior. It's about transforming a messy pile of unstructured data into clear, actionable insights by spotting the patterns that truly matter.

From Raw Qualitative Data to Rich Insights

Let’s be honest. Staring at pages of interview transcripts or a mountain of customer feedback can feel overwhelming. The real work isn’t just collecting qualitative data; it's unlocking the human stories buried within it. This guide will demystify the process of qualitative analysis. Think of it less like a rigid set of academic rules and more like a powerful toolkit for understanding the context that quantitative data alone can never provide.

Here’s a practical example: quantitative data might tell you that 75% of users abandoned their shopping cart. That's a problem, but it's a faceless one.

Qualitative data, analyzed using the right techniques, tells you why they left. It captures their frustration in their own words: "I couldn't find the guest checkout button," or "The shipping costs were a total shock." Now you have a specific problem you can fix.

We'll walk you through the proven qualitative data analysis techniques that transform unstructured text, audio, and video into insights that are impossible to ignore. Mastering these methods is a game-changer for anyone in research, marketing, or product development looking to perform effective qualitative analysis.

The Bottom Line: Qualitative analysis focuses on interpreting meaning, understanding lived experiences, and identifying patterns in non-numerical data. It provides the depth and context that quantitative data just can't touch, making it an essential part of any comprehensive research strategy.

A Snapshot of Qualitative Analysis Techniques

To give you a quick overview, here’s a table comparing the most common qualitative data analysis techniques we’ll be covering. Think of this as your cheat sheet for choosing the right tool for the job.

| Technique | Primary Goal | Best Used For |
|---|---|---|
| Thematic Analysis | Identifying and analyzing patterns or "themes" across a qualitative dataset. | Open-ended surveys, interview transcripts, user feedback. |
| Content Analysis | Quantifying the presence and frequency of specific words or concepts in data. | Social media posts, customer reviews, news articles. |
| Narrative Analysis | Examining how people construct stories to make sense of their experiences. | In-depth interviews, case studies, personal journals. |
| Grounded Theory | Developing new theories based directly on the qualitative data collected. | Exploratory research where little is known about a topic. |
| Discourse Analysis | Studying how language is used in social contexts and to signal power dynamics. | Political speeches, marketing copy, workplace communication. |

Each of these techniques offers a different lens for viewing your data, helping you build a richer, more complete picture of what's really happening.

The Journey of Qualitative Analysis

To truly master today's qualitative data analysis techniques, it helps to understand their origins. The tools we have now are the product of decades of innovation aimed at finding better, more reliable ways to make sense of messy human stories. Imagine a researcher's office before computers: no software, just piles of paper, scissors, and colored pens. This was the 'cut-and-paste' era, where analysts would physically snip excerpts from interview transcripts and group them to spot recurring themes.

This manual process formed the bedrock of what we now call coding. It was effective for small projects but incredibly slow and difficult to scale. This limitation drove the demand for more structured, rigorous methods, leading to breakthroughs like grounded theory in the 1960s, which provided a formal framework for building theories directly from data.

The Technological Turning Point in Qualitative Analysis

The real revolution in qualitative data analysis began in the 1980s with the rise of personal computers. This marked the dawn of Computer-Assisted Qualitative Data Analysis Software (CAQDAS). Suddenly, the physical world of paper and scissors gave way to a digital one, opening up a new frontier for research.

Interestingly, some of the earliest adopters were biblical scholars who needed a way to manage and cross-reference enormous ancient texts. They pioneered the use of mainframe computers for analyzing non-numeric data, paving the way for the tools we use today. The switch from manual to digital wasn't just about speed; it changed the very DNA of qualitative research.

The evolution was swift. Before the 1960s, analysis was manual. By the 1980s, CAQDAS had arrived, making qualitative analysis more systematic and scalable. By the mid-1990s, software for coding, memoing, and data retrieval was a global standard. This shift is clear in the numbers: there was a 40% jump in published qualitative studies using software between 1990 and 2000. You can explore a detailed timeline of CAQDAS development to see its significant impact.

How CAQDAS Changed the Game

The arrival of CAQDAS fundamentally changed how researchers could engage with their data, introducing several key improvements that are now standard in qualitative data analysis techniques.

- Handling Scale and Complexity: Researchers could finally tackle massive datasets—like hundreds of interviews or thousands of open-ended survey answers—without being buried in paper.

- Systematic Coding: Digital tools brought order to the chaos. Analysts could easily apply codes, search for keywords, and visualize how themes connect across the entire dataset.

- Enhanced Transparency: Software creates a built-in audit trail. Every decision in the qualitative analysis process, from the first code to the final theme, is documented, making it more transparent and defensible.

- Team Collaboration: Digital projects can be easily shared, allowing entire teams to work on the same dataset. This streamlines collaboration and helps ensure inter-coder reliability.

Key Takeaway: The leap from manual methods to CAQDAS was a methodological revolution. It empowered researchers to conduct larger, more rigorous, and more transparent qualitative studies, solidifying the importance of qualitative data analysis techniques.

This history matters because today's powerful qualitative data analysis techniques are built on decades of innovation. Understanding this journey reinforces the core principles—rigor, systematic thinking, and transparency—that are essential for telling meaningful stories with data.

Your Guide to Core Analysis Techniques

Now that we’ve covered the why, let’s dive into the how of qualitative data analysis. This is where you transform raw data into a compelling narrative. However, choosing the right technique is critical. Think of it like a mechanic's toolbox: you wouldn't use a wrench to hammer a nail. Similarly, the qualitative data analysis techniques you select must align with your research question and data type.

Thematic Analysis: Finding the Patterns

If you're new to qualitative analysis, thematic analysis is your best friend. It’s one of the most flexible and widely used methods. The primary goal is to identify, analyze, and report on patterns—or "themes"—that recur across your dataset. This technique is perfect for making sense of interview transcripts or open-ended survey responses.

Imagine you just interviewed 20 customers about your new mobile app. Using thematic analysis, you would read through every conversation and spot recurring ideas. You'll start to see phrases like "confusing navigation," "slow loading times," or "helpful customer support" emerge. These are your themes, providing a structured picture of what matters to your users. The focus is on capturing meaning, not just counting words.
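To make the bookkeeping behind that example concrete, here is a minimal Python sketch. It assumes you have already read the transcripts and hand-coded the excerpts yourself; the interview IDs, codes, and codebook below are all hypothetical, invented purely for illustration. The script only groups your codes into themes and shows which interviews each theme appears in.

```python
from collections import defaultdict

# Hypothetical hand-coded excerpts: (interview_id, code assigned by the analyst)
coded_excerpts = [
    ("P01", "can't find settings"),
    ("P01", "app freezes on launch"),
    ("P02", "menu labels unclear"),
    ("P03", "slow to load photos"),
    ("P03", "support replied quickly"),
    ("P04", "can't find settings"),
]

# Hypothetical codebook mapping each code to a broader theme
code_to_theme = {
    "can't find settings": "confusing navigation",
    "menu labels unclear": "confusing navigation",
    "app freezes on launch": "slow loading times",
    "slow to load photos": "slow loading times",
    "support replied quickly": "helpful customer support",
}

def interviews_per_theme(excerpts, codebook):
    """Return {theme: set of interview ids in which the theme appears}."""
    themes = defaultdict(set)
    for interview_id, code in excerpts:
        themes[codebook[code]].add(interview_id)
    return dict(themes)

theme_map = interviews_per_theme(coded_excerpts, code_to_theme)
for theme, ids in sorted(theme_map.items()):
    print(f"{theme}: {len(ids)} interview(s) -> {sorted(ids)}")
```

Treat the output as a map back into the transcripts rather than a result in itself. The interpretive work of deciding what each theme means still happens when you reread the excerpts behind it.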

Content Analysis: Counting the Concepts

While thematic analysis is about meaning, content analysis is more systematic and often incorporates quantitative elements. With this technique, you categorize qualitative data and then count how often certain words, ideas, or concepts appear. It's an excellent way to understand the dominant topics in a large volume of text, such as social media comments or customer reviews.

For instance, a marketing team could use content analysis to sift through 1,000 online reviews. They might create categories such as:

- Positive Feedback: (e.g., "love it," "easy to use," "great value")

- Negative Feedback: (e.g., "broke quickly," "too expensive," "poor quality")

- Feature Requests: (e.g., "wish it had," "needs a...," "add an option for")

By tallying mentions in each category, the team can quickly quantify public sentiment. This approach turns subjective comments into measurable data points, offering clear evidence of what’s working and what isn’t.
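The tallying step above can be sketched in a few lines of Python. This is a deliberately simple illustration, not a production sentiment tool: the category dictionary reuses the example phrases from the list, the sample reviews are made up, and the matching is plain case-insensitive substring search (so a phrase like "needs a" will also match "needs attention").

```python
# Hypothetical category dictionary, seeded with the example phrases above
CATEGORIES = {
    "positive": ["love it", "easy to use", "great value"],
    "negative": ["broke quickly", "too expensive", "poor quality"],
    "feature_request": ["wish it had", "needs a", "add an option for"],
}

def tally_categories(reviews, categories=CATEGORIES):
    """Count how many reviews mention at least one phrase from each category."""
    counts = {name: 0 for name in categories}
    for review in reviews:
        text = review.lower()
        for name, phrases in categories.items():
            # A review counts once per category, however many phrases it hits
            if any(phrase in text for phrase in phrases):
                counts[name] += 1
    return counts

# Made-up sample reviews for illustration
reviews = [
    "Love it! So easy to use.",
    "Broke quickly and was too expensive for the quality.",
    "Great value, but I wish it had a dark mode.",
    "Arrived on time.",
]
counts = tally_categories(reviews)
print(counts)
```

Counting reviews rather than raw phrase hits is a design choice: it stops one very enthusiastic (or very angry) reviewer from skewing the totals. A single review can still land in more than one category, which is usually what you want for mixed feedback.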

Narrative Analysis: Understanding the Story

Humans are natural storytellers. Narrative analysis leverages this by focusing on how people construct stories to make sense of their lives. Instead of breaking data into themes, this method examines the entire narrative—the plot, characters, and setting. It’s ideal for in-depth interviews or case studies where individuals share detailed personal histories.

A researcher studying career changes might use this technique to understand an individual's professional journey. They would examine how the person frames their story: What was the turning point? Who were the key figures? This qualitative data analysis technique provides rich insights into how people perceive and interpret their world.

By focusing on story structure, narrative analysis helps you understand not just what happened, but how people give meaning to those events.

Discourse Analysis: Exploring Language and Context

Discourse analysis takes qualitative inquiry a step further. It looks beyond what is said to examine how it's said. This technique analyzes language within its social context, revealing how words build meaning, signal power, and influence others. It's a powerful tool for dissecting everything from political speeches and marketing copy to everyday workplace communication.

For example, a discourse analyst could examine a company's job descriptions, analyzing word choice for hidden biases. Does the ad use masculine-coded terms like "dominant," which might discourage female applicants? This method uncovers the subtle social dynamics woven into our communication.
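A first pass at that job-description check could look something like the Python sketch below. The word list is an assumption made for illustration: only "dominant" comes from the example above, and a real study would draw on a published, validated lexicon. The script simply flags terms and returns the sentence around each one, so the analyst can read it in context.

```python
import re

# Illustrative word list only: "dominant" comes from the example above;
# the other terms are assumptions added for this sketch.
MASCULINE_CODED = ["dominant", "competitive", "aggressive"]

def flag_coded_terms(text, terms=MASCULINE_CODED):
    """Return (term, surrounding sentence) pairs for each flagged term."""
    # Naive sentence split on whitespace that follows ., ! or ?
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    hits = []
    for sentence in sentences:
        for term in terms:
            # Whole-word, case-insensitive match
            if re.search(rf"\b{re.escape(term)}\b", sentence, flags=re.IGNORECASE):
                hits.append((term, sentence))
    return hits

# Made-up job ad text for illustration
job_ad = (
    "We want a dominant player in a competitive market. "
    "You will work closely with a supportive team."
)
hits = flag_coded_terms(job_ad)
for term, sentence in hits:
    print(f"{term!r} in: {sentence}")
```

Flagging words is only the starting point. The discourse analysis itself is the close reading that follows: who is being addressed, what is assumed about them, and how the wording positions the reader.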

Grounded Theory: Building Theory from Data

Most research methods start with a theory to test. Grounded theory reverses this. It’s a bottom-up approach where you start with only the data and build a new theory that is "grounded" in your findings. This exploratory technique is ideal for investigating topics where little prior research exists. You systematically collect and analyze data, allowing the theory to emerge organically.

This rigorous approach was developed in the mid-20th century as researchers sought to make qualitative methods as respected as quantitative ones. Glaser and Strauss pioneered it in 1967, and their systematic coding process has influenced over 60% of qualitative research in health and social sciences since the 1970s. These milestones helped turn qualitative analysis into a standardized field of study.

Each of these core qualitative data analysis techniques offers a unique path from raw information to smart decisions. For more guides on research and analysis, check out our other posts on the MeowTXT blog.

How to Choose the Right Analysis Method

Choosing the right analysis method is a critical decision in qualitative research. The wrong choice can lead to wasted time and weak conclusions. Think of it like choosing between a microscope and a telescope; the tool must fit the job.

How do you choose? It boils down to asking three core questions:

- What is my primary research question?

- What type of qualitative data have I collected?

- What is my end goal—to explore, explain, or quantify?

Aligning your qualitative data analysis techniques with these three factors is the key to a successful study.

Aligning Method With Your Research Goal

First, be clear about what you're trying to achieve. Are you exploring a new area to discover unknown user motivations? Or are you trying to organize customer feedback by counting specific complaints? Your goal should guide your choice of technique.

For pure discovery—understanding the "why" behind user behaviors—thematic analysis is a flexible and powerful starting point. It allows themes to emerge naturally from the data.

But if your goal is more systematic, like categorizing and counting how many times "poor customer service" appears in 500 reviews, content analysis is a much better fit. It’s designed to turn unstructured text into quantifiable data.

Matching Technique to Data Type

The format of your data also influences your choice of method. Do you have a few long, story-rich interviews, or thousands of short, open-ended survey responses?

Different qualitative data analysis techniques are optimized for different types of data.

Narrative analysis, for instance, is perfect for those long interviews filled with personal stories. It’s designed to unpack the structure and plot of a person's account. Trying to use it on one-sentence survey responses would be ineffective.

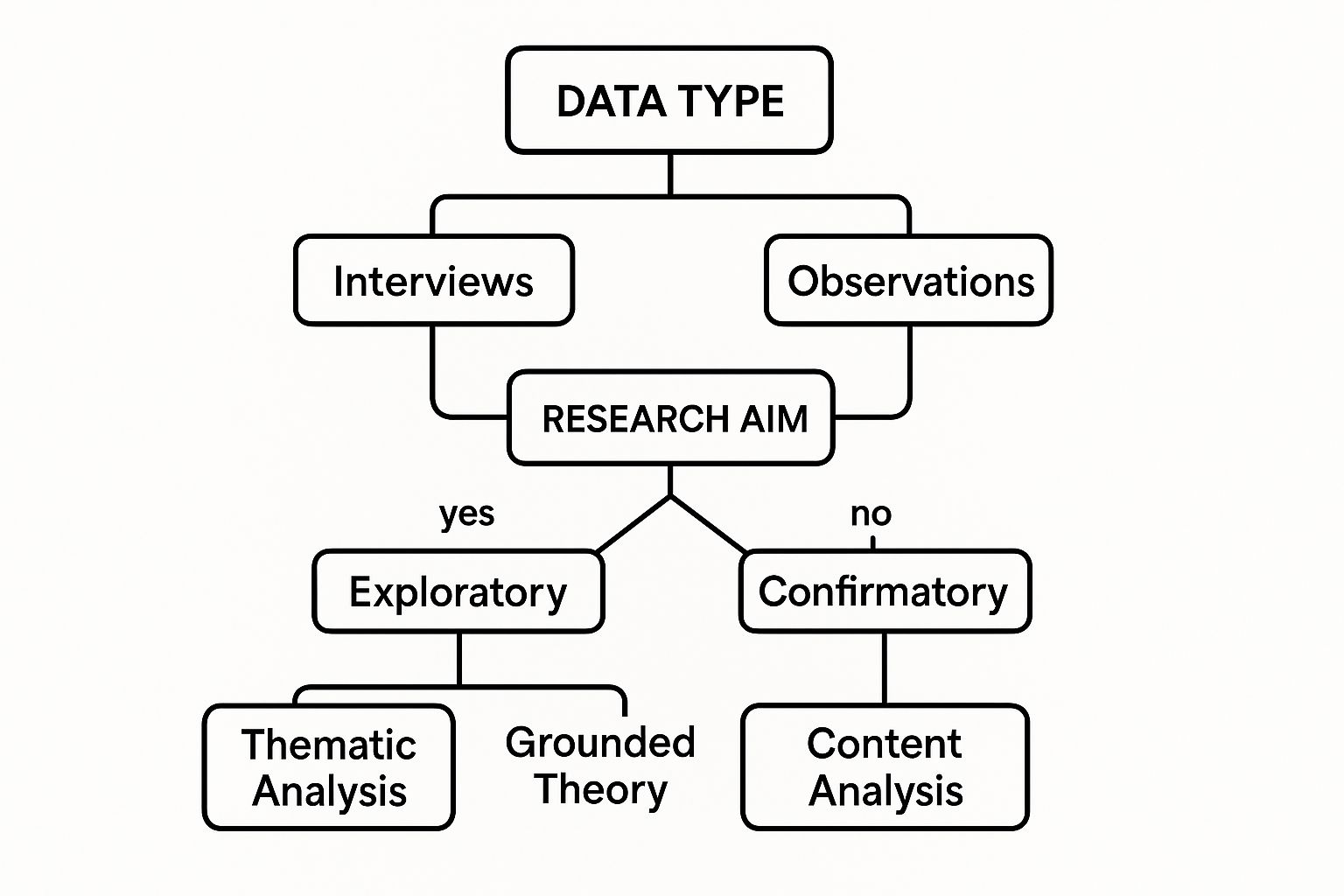

This chart breaks down the decision-making process for choosing a qualitative data analysis technique.

As you can see, letting your data and goals lead the way makes the choice clearer. Remember, all your analysis activities should follow your organization's data handling policies, like those outlined in our terms of service.

Selecting Your Qualitative Analysis Method

To make this crystal clear, here’s a quick-glance table comparing the most common qualitative techniques. Use it to see which approach lines up best with your project's specific needs.

| Technique | Best For (Research Goal) | Common Data Sources | Analysis Focus |
|---|---|---|---|
| Thematic Analysis | Exploring patterns and identifying common themes across a dataset. | Interviews, focus groups, open-ended surveys. | Identifying the underlying meaning and recurring ideas. |
| Content Analysis | Quantifying the frequency of specific words, concepts, or categories. | Social media posts, customer reviews, news articles. | Counting and categorizing content in a systematic way. |
| Narrative Analysis | Understanding how people construct stories and make sense of experiences. | In-depth interviews, case studies, personal biographies. | Examining the structure, plot, and characters of stories. |
| Discourse Analysis | Studying how language is used in its social and power context. | Political speeches, advertisements, workplace communication. | Analyzing language beyond the literal words to uncover meaning. |
| Grounded Theory | Developing a new theory directly from the data without preconceived ideas. | Exploratory research, long-term ethnographic studies. | Generating a theory that emerges from systematic data collection. |

Ultimately, choosing a method isn't about finding the single "best" one. It's about finding the right one for your specific project. By aligning your question, data, and goals, you can confidently select the best qualitative data analysis techniques to build a bridge from raw data to real understanding.
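If it helps to see the table as a lookup, here is a small Python sketch that encodes it. Everything here is a simplification for illustration: the goal labels are hypothetical names for the table's "Best For" column, and the 50-word threshold for "short responses" is an arbitrary assumption, not an established rule.

```python
# Hypothetical goal labels mapped to the techniques in the table above
TECHNIQUE_BY_GOAL = {
    "explore_patterns": "Thematic Analysis",
    "quantify_concepts": "Content Analysis",
    "understand_stories": "Narrative Analysis",
    "study_language_in_context": "Discourse Analysis",
    "build_new_theory": "Grounded Theory",
}

# Assumption: an arbitrary cut-off for "too short to carry a story"
SHORT_RESPONSE_WORDS = 50

def suggest_technique(goal, avg_words_per_response=None):
    """Return (technique, notes) for a research goal; notes flag data mismatches."""
    technique = TECHNIQUE_BY_GOAL.get(goal)
    if technique is None:
        raise ValueError(f"Unknown goal {goal!r}; expected one of {sorted(TECHNIQUE_BY_GOAL)}")
    notes = []
    if (
        technique == "Narrative Analysis"
        and avg_words_per_response is not None
        and avg_words_per_response < SHORT_RESPONSE_WORDS
    ):
        notes.append("Responses look short; narrative analysis needs story-rich accounts.")
    return technique, notes

print(suggest_technique("quantify_concepts"))
print(suggest_technique("understand_stories", avg_words_per_response=12))
```

A lookup like this is a conversation starter for a research team, not a substitute for judgment. Real projects often combine techniques or adapt them to the question at hand.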

Let's get real for a moment. Theories and abstract ideas are great, but seeing qualitative data analysis techniques actually work in the real world? That’s when the lightbulb really goes on. We're going to step away from the hypothetical and look at a powerful example from healthcare where these methods literally save lives: retrospective case note review.

This isn't some dusty academic exercise. It's a hands-on qualitative method used in hospitals worldwide to dig into patient records, figure out what went wrong, and make sure it doesn't happen again. Picture a team of doctors, nurses, and administrators looking over patient files—not as a series of checkboxes, but as individual stories. Their job is to apply their expert judgment to these records to find critical incidents that raw numbers would simply glide over.

The Power of Collaborative Review

At its heart, retrospective case note review is like having a structured conversation with the past. It brings a multi-disciplinary team together to assemble the complete picture of a patient's experience.

It usually unfolds in layers:

- First, non-clinical staff might do an initial pass, pulling out basic facts like admission dates and diagnoses.

- Next, nurses add their perspective, giving context on how care was delivered and what patient interactions were like.

- Finally, physicians make the crucial judgment call, evaluating the clinical care and determining if a preventable error occurred.

This tiered approach is a perfect illustration of qualitative analysis in motion. It takes a flat patient file and turns it into a rich, multi-dimensional dataset, revealing subtleties about communication gaps, system-wide flaws, and the thinking behind key decisions. It's all about finding the "why" behind an event, not just the "what."

This technique puts a spotlight on the human element inside a very complex machine. It recognizes that patient outcomes are shaped by countless tiny decisions and interactions—the kind of stuff that's completely invisible to a spreadsheet.

Retrospective case note review is a cornerstone of quality improvement in medicine. It grew out of the landmark Harvard Medical Practice Study back in the early 1990s and has been the backbone of major studies on healthcare harm for over 25 years. In huge systems like the UK's National Health Service (NHS), these reviews can cover thousands of records. Studies have found adverse event rates between 4% and 17% of hospital admissions, proving just how vital this method is for understanding and measuring patient safety problems. You can see how this method is used to measure healthcare harm and fuel real change.

What the Data Looks Like

Seeing how this is structured makes it all click. This screenshot from an NHS report lays out the framework for reviewing patient notes.

This image maps out the different review stages, from the first screen to a deep dive by clinical experts. It shows you this isn't just a random hunt for mistakes; it's a systematic, multi-stage process built to make the findings as rigorous and reliable as possible.

This real-world example shows that qualitative data analysis techniques are so much more than academic jargon. When you apply them with a clear purpose, they deliver concrete, life-saving insights. The story of retrospective case note review is proof that by digging into the narrative hidden within the data, we can spot critical failures and build safer, better systems for all of us. It's a powerful reminder that sometimes, the most important piece of data isn't a number—it's a story waiting to be understood.

How to Make Sure Your Qualitative Analysis Is Trustworthy

Let's be honest: without rigor, qualitative analysis can feel a bit... subjective. Your most amazing insights risk being shrugged off unless you can prove they’re built on solid ground.

So, how do you make your findings robust, defensible, and genuinely trustworthy? It's not about memorizing rules from a textbook. It’s about building a transparent process that anyone can follow.

The single most important practice here is creating a detailed audit trail. Think of it as leaving a clear trail of breadcrumbs showing exactly how you moved from raw interview transcripts to your final conclusions. Every single decision—why you coded a phrase a certain way, how you spotted an emerging theme—needs to be documented.

Build in Transparency and Consistency

So how do you actually build this trail? The simplest and most effective tool is the humble researcher memo.

These are just notes you write to yourself as you work through the data. They capture your thought process in real-time—your questions, your "aha!" moments, and your analytical dead-ends. Memos make your intellectual journey visible and easy for others (and your future self) to follow.
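If you're not using dedicated software, even a tiny script can keep memos structured. The Python sketch below is one possible shape for a memo log, with hypothetical field names (`subject`, `decision`, `rationale`) and made-up entries. CAQDAS tools have their own memo features, so treat this as an illustration of the idea rather than a recommended tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class Memo:
    """One audit-trail entry: what was decided, about what, and why."""
    subject: str    # e.g. a code name, theme, or transcript ID
    decision: str   # what you did
    rationale: str  # why you did it
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

audit_trail = []

def log_memo(subject, decision, rationale):
    """Append a timestamped memo to the audit trail and return it."""
    memo = Memo(subject, decision, rationale)
    audit_trail.append(memo)
    return memo

def memos_about(subject):
    """Retrieve every memo written about a given code, theme, or transcript."""
    return [m for m in audit_trail if m.subject == subject]

# Made-up entries for illustration
log_memo(
    "confusing navigation",
    "Merged 'menu labels unclear' into this theme",
    "Both codes describe users failing to locate a feature.",
)
log_memo(
    "P03 transcript",
    "Flagged the pricing comments for a second read",
    "Unsure whether the tone is sarcastic; revisit with the audio.",
)

for memo in memos_about("confusing navigation"):
    print(memo.timestamp, "|", memo.decision, "|", memo.rationale)
```

The key design choice is recording the rationale alongside the decision. That "why" is the part a reviewer, or your future self, will need months later.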

Another key practice is reflexivity. This is just a fancy word for being brutally honest with yourself about your own biases. We all have them. Our personal histories and beliefs can subtly color how we interpret what people say. Reflexivity is the active, ongoing process of checking those assumptions at the door so they don't hijack your findings.

By documenting your decisions in memos and actively reflecting on your own biases, you transform your analysis. It stops being a "black box" of personal opinion and becomes a systematic, defensible process. This is what separates amateur work from high-quality, respected research.

These practices help shore up your work from the inside, but what about checking your findings with the outside world? Specifically, the people who gave you the data in the first place?

Validate Your Ideas and Lock in Reliability

One of the most powerful ways to do this is with member checking, sometimes called participant validation. It’s a simple idea: you take your initial findings back to the people you interviewed.

You're essentially asking, "Does this ring true to you? Does my interpretation actually capture your experience?" This gives participants a chance to correct misunderstandings or add crucial context, making your analysis infinitely stronger.

And if you’re working with a team? You absolutely must ensure everyone is interpreting the data in the same way. That's where inter-coder reliability comes in. This process involves having two or more researchers independently code the same chunk of data. Afterwards, you compare notes. A high level of agreement between coders is strong evidence that your coding scheme is solid and not just one person's quirky interpretation.
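One common way to put a number on that agreement is Cohen's kappa, which corrects raw agreement for the matches two coders would get by chance. The article doesn't prescribe a specific statistic, so take this as one reasonable option. The Python sketch below computes it by hand for two coders; the labels and codings are made up for illustration.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders labelling the same items, in the same order."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of items.")
    n = len(coder_a)
    # Observed agreement: share of items given the same label by both coders
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: based on how often each coder used each label
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1:
        # Both coders used a single identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)

# Made-up codings of the same eight excerpts by two researchers
coder_a = ["nav", "nav", "speed", "support", "speed", "nav", "support", "speed"]
coder_b = ["nav", "speed", "speed", "support", "speed", "nav", "support", "nav"]

kappa = cohens_kappa(coder_a, coder_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

Here the coders agree on 6 of 8 excerpts (75%), but kappa comes out lower (about 0.62) because some of that agreement would happen by chance. When the score is low, the fix is usually a conversation about the code definitions, followed by another round of independent coding.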

Of course, all data handling and validation must be done with the utmost care for participant information. You can read more about our approach in our privacy policy.

By weaving these essential qualitative data analysis techniques into your workflow—audit trails, reflexivity, member checking, and inter-coder reliability—you sidestep the most common pitfalls. You'll produce work that isn’t just insightful, but is built to withstand scrutiny.

Common Questions About Qualitative Analysis

When you first dip your toes into the world of qualitative data analysis, a few common questions always seem to pop up. Let's tackle them head-on, so you can move forward with confidence and get the most out of your research.

How Is Qualitative Different from Quantitative Analysis?

Think of it this way: quantitative analysis is all about the "what." It deals with numbers and stats to measure things, test hypotheses, and calculate averages. It's objective and structured.

Qualitative analysis, on the other hand, is about the "why" and the "how." Here, you’re diving into non-numerical data—like interview transcripts, open-ended survey responses, or observation notes—to understand people's experiences, motivations, and perceptions in their own words. The various qualitative data analysis techniques are designed to explore these depths.

Do I Need Special Software for This?

Honestly, for a small project with just a few interviews, you can probably get by with a simple spreadsheet or word processor. But once your dataset gets bigger, specialized software becomes a lifesaver.

Tools known as CAQDAS (Computer-Assisted Qualitative Data Analysis Software), like NVivo or ATLAS.ti, are built for this. They make organizing, coding, and retrieving your data much more systematic and efficient. You’ll save a ton of time and seriously cut down on the risk of human error.

The right software doesn't just speed up your work; it enhances the rigor of your analysis by helping you maintain a clear audit trail and manage complex datasets with ease.

What’s the Best Way to Manage a Lot of Data?

Getting swamped by data is a real risk. The key is to be systematic from the very beginning. Before you even start coding, create a clear framework or plan for how you’ll approach it.

Break the analysis down into manageable phases, and use software to keep your files and codes neatly organized. I also highly recommend getting into the habit of writing regular memos. These are just short, reflective notes where you jot down your thoughts, track decisions, and connect ideas. It’s a simple practice that keeps you from getting lost in the weeds.

What Are Some Common Mistakes to Avoid?

It’s easy to stumble when you're new to qualitative analysis. Here are a few classic pitfalls to watch out for:

- Forcing the data: Don't try to make your data fit a theory you already have. Let the insights emerge naturally from what people are telling you.

- Ignoring your own bias: We all have biases. The trick is to acknowledge them through a practice called reflexivity, where you constantly question your own assumptions.

- "Quote-and-run": A common mistake is just dropping quotes into a report without explaining why they're important. Your job is to analyze, not just present raw data.

- Over-generalizing: Be careful not to make sweeping claims based on a very small sample size.

Rigorous qualitative data analysis techniques are your best defense against these issues.
